Solution for 2d 2 3 3

Diffusion equations¶

The famous diffusion equation, also known as the heat equation, reads

\[\frac{\partial u}{\partial t} = {\alpha} \frac{\partial^2 u}{\partial x^2},\]

where \(u(x,t)\) is the unknown function to be solved for, \(x\) is a coordinate in space, and \(t\) is time. The coefficient \({\alpha}\) is the diffusion coefficient and determines how fast \(u\) changes in time. A quick short form for the diffusion equation is \(u_t = {\alpha} u_{xx}\).

Compared to the wave equation, \(u_{tt}=c^2u_{xx}\), which looks very similar, the diffusion equation features solutions that are very different from those of the wave equation. Also, the diffusion equation makes quite different demands to the numerical methods.

Typical diffusion problems may experience rapid change in the very beginning, but then the evolution of \(u\) becomes slower and slower. The solution is usually very smooth, and after some time, one cannot recognize the initial shape of \(u\). This is in sharp contrast to solutions of the wave equation where the initial shape is preserved in homogeneous media - the solution is then basically a moving initial condition. The standard wave equation \(u_{tt}=c^2u_{xx}\) has solutions that propagates with speed \(c\) forever, without changing shape, while the diffusion equation converges to a stationary solution \(\bar u(x)\) as \(t\rightarrow\infty\). In this limit, \(u_t=0\), and \(\bar u\) is governed by \(\bar u''(x)=0\). This stationary limit of the diffusion equation is called the Laplace equation and arises in a very wide range of applications throughout the sciences.

It is possible to solve for \(u(x,t)\) using an explicit scheme, as we do in the section An explicit method for the 1D diffusion equation, but the time step restrictions soon become much less favorable than for an explicit scheme applied to the wave equation. And of more importance, since the solution \(u\) of the diffusion equation is very smooth and changes slowly, small time steps are not convenient and not required by accuracy as the diffusion process converges to a stationary state. Therefore, implicit schemes (as described in the section Implicit methods for the 1D diffusion equation) are popular, but these require solutions of systems of algebraic equations. We shall use ready-made software for this purpose, but also program some simple iterative methods. The exposition is, as usual in this book, very basic and focuses on the basic ideas and how to implement. More comprehensive mathematical treatments and classical analysis of the methods are found in lots of textbooks. A favorite of ours in this respect is the one by LeVeque [Ref07]. The books by Strikwerda [Ref08] and by Lapidus and Pinder [Ref09] are also highly recommended as additional material on the topic.

An explicit method for the 1D diffusion equation¶

Explicit finite difference methods for the wave equation \(u_{tt}=c^2u_{xx}\) can be used, with small modifications, for solving \(u_t = {\alpha} u_{xx}\) as well. The exposition below assumes that the reader is familiar with the basic ideas of discretization and implementation of wave equations from the chapter Wave equations. Readers not familiar with the Forward Euler, Backward Euler, and Crank-Nicolson (or centered or midpoint) discretization methods in time should consult, e.g., the section Finite difference methods in [Ref02].

The initial-boundary value problem for 1D diffusion¶

To obtain a unique solution of the diffusion equation, or equivalently, to apply numerical methods, we need initial and boundary conditions. The diffusion equation goes with one initial condition \(u(x,0)=I(x)\), where \(I\) is a prescribed function. One boundary condition is required at each point on the boundary, which in 1D means that \(u\) must be known, \(u_x\) must be known, or some combination of them.

We shall start with the simplest boundary condition: \(u=0\). The complete initial-boundary value diffusion problem in one space dimension can then be specified as

\[\tag{349} \frac{\partial u}{\partial t} = {\alpha} \frac{\partial^2 u}{\partial x^2} + f, \quad x\in (0,L),\ t\in (0,T]\]

\[\tag{350} u(x,0) = I(x), \quad x\in [0,L]\]

\[\tag{351} u(0,t) = 0, \quad t>0,\]

\[\tag{352} u(L,t) = 0, \quad t>0{\thinspace .}\]

With only a first-order derivative in time, only one initial condition is needed, while the second-order derivative in space leads to a demand for two boundary conditions. We have added a source term \(f=f(x,t)\), which is convenient when testing implementations.

Diffusion equations like (349) have a wide range of applications throughout physical, biological, and financial sciences. One of the most common applications is propagation of heat, where \(u(x,t)\) represents the temperature of some substance at point \(x\) and time \(t\). Other applications are listed in the section Applications.

Forward Euler scheme¶

The first step in the discretization procedure is to replace the domain \([0,L]\times [0,T]\) by a set of mesh points. Here we apply equally spaced mesh points

\[x_i=i\Delta x,\quad i=0,\ldots,N_x,\]

and

\[t_n=n\Delta t,\quad n=0,\ldots,N_t {\thinspace .}\]

Moreover, \(u^n_i\) denotes the mesh function that approximates \(u(x_i,t_n)\) for \(i=0,\ldots,N_x\) and \(n=0,\ldots,N_t\). Requiring the PDE (349) to be fulfilled at a mesh point \((x_i,t_n)\) leads to the equation

\[\tag{353} \frac{\partial}{\partial t} u(x_i, t_n) = {\alpha}\frac{\partial^2}{\partial x^2} u(x_i, t_n) + f(x_i,t_n),\]

The next step is to replace the derivatives by finite difference approximations. The computationally simplest method arises from using a forward difference in time and a central difference in space:

\[\tag{354} [D_t^+ u = {\alpha} D_xD_x u + f]^n_i {\thinspace .}\]

Written out,

\[\tag{355} \frac{u^{n+1}_i-u^n_i}{\Delta t} = {\alpha} \frac{u^{n}_{i+1} - 2u^n_i + u^n_{i-1}}{\Delta x^2} + f_i^n{\thinspace .}\]

We have turned the PDE into algebraic equations, also often called discrete equations. The key property of the equations is that they are algebraic, which makes them easy to solve. As usual, we anticipate that \(u^n_i\) is already computed such that \(u^{n+1}_i\) is the only unknown in (355). Solving with respect to this unknown is easy:

\[\tag{356} u^{n+1}_i = u^n_i + F\left( u^{n}_{i+1} - 2u^n_i + u^n_{i-1}\right) + \Delta t f_i^n,\]

where we have introduced the mesh Fourier number:

\[\tag{357} F = {\alpha}\frac{\Delta t}{\Delta x^2}{\thinspace .}\]

\(F\) is the key parameter in the discrete diffusion equation

Note that \(F\) is a dimensionless number that lumps the key physical parameter in the problem, \({\alpha}\), and the discretization parameters \(\Delta x\) and \(\Delta t\) into a single parameter. Properties of the numerical method are critically dependent upon the value of \(F\) (see the section Analysis of schemes for the diffusion equation for details).

The computational algorithm then becomes

- compute \(u^0_i=I(x_i)\) for \(i=0,\ldots,N_x\)

- for \(n=0,1,\ldots,N_t\):

- apply (356) for all the internal spatial points \(i=1,\ldots,N_x-1\)

- set the boundary values \(u^{n+1}_i=0\) for \(i=0\) and \(i=N_x\)

The algorithm is compactly and fully specified in Python:

import numpy as np x = np . linspace ( 0 , L , Nx + 1 ) # mesh points in space dx = x [ 1 ] - x [ 0 ] t = np . linspace ( 0 , T , Nt + 1 ) # mesh points in time dt = t [ 1 ] - t [ 0 ] F = a * dt / dx ** 2 u = np . zeros ( Nx + 1 ) # unknown u at new time level u_n = np . zeros ( Nx + 1 ) # u at the previous time level # Set initial condition u(x,0) = I(x) for i in range ( 0 , Nx + 1 ): u_n [ i ] = I ( x [ i ]) for n in range ( 0 , Nt ): # Compute u at inner mesh points for i in range ( 1 , Nx ): u [ i ] = u_n [ i ] + F * ( u_n [ i - 1 ] - 2 * u_n [ i ] + u_n [ i + 1 ]) + \ dt * f ( x [ i ], t [ n ]) # Insert boundary conditions u [ 0 ] = 0 ; u [ Nx ] = 0 # Update u_n before next step u_n [:] = u

Note that we use a for \({\alpha}\) in the code, motivated by easy visual mapping between the variable name and the mathematical symbol in formulas.

We need to state already now that the shown algorithm does not produce meaningful results unless \(F\leq 1/2\). Why is explained in the section Analysis of schemes for the diffusion equation.

Implementation¶

The file diffu1D_u0.py contains a complete function solver_FE_simple for solving the 1D diffusion equation with \(u=0\) on the boundary as specified in the algorithm above:

import numpy as np def solver_FE_simple ( I , a , f , L , dt , F , T ): """ Simplest expression of the computational algorithm using the Forward Euler method and explicit Python loops. For this method F <= 0.5 for stability. """ import time ; t0 = time . clock () # For measuring the CPU time Nt = int ( round ( T / float ( dt ))) t = np . linspace ( 0 , Nt * dt , Nt + 1 ) # Mesh points in time dx = np . sqrt ( a * dt / F ) Nx = int ( round ( L / dx )) x = np . linspace ( 0 , L , Nx + 1 ) # Mesh points in space # Make sure dx and dt are compatible with x and t dx = x [ 1 ] - x [ 0 ] dt = t [ 1 ] - t [ 0 ] u = np . zeros ( Nx + 1 ) u_n = np . zeros ( Nx + 1 ) # Set initial condition u(x,0) = I(x) for i in range ( 0 , Nx + 1 ): u_n [ i ] = I ( x [ i ]) for n in range ( 0 , Nt ): # Compute u at inner mesh points for i in range ( 1 , Nx ): u [ i ] = u_n [ i ] + F * ( u_n [ i - 1 ] - 2 * u_n [ i ] + u_n [ i + 1 ]) + \ dt * f ( x [ i ], t [ n ]) # Insert boundary conditions u [ 0 ] = 0 ; u [ Nx ] = 0 # Switch variables before next step #u_n[:] = u # safe, but slow u_n , u = u , u_n t1 = time . clock () return u_n , x , t , t1 - t0 # u_n holds latest u

A faster version, based on vectorization of the finite difference scheme, is available in the function solver_FE . The vectorized version replaces the explicit loop

for i in range ( 1 , Nx ): u [ i ] = u_n [ i ] + F * ( u_n [ i - 1 ] - 2 * u_n [ i ] + u_n [ i + 1 ]) \ + dt * f ( x [ i ], t [ n ])

by arithmetics on displaced slices of the u array:

u [ 1 : Nx ] = u_n [ 1 : Nx ] + F * ( u_n [ 0 : Nx - 1 ] - 2 * u_n [ 1 : Nx ] + u_n [ 2 : Nx + 1 ]) \ + dt * f ( x [ 1 : Nx ], t [ n ]) # or u [ 1 : - 1 ] = u_n [ 1 : - 1 ] + F * ( u_n [ 0 : - 2 ] - 2 * u_n [ 1 : - 1 ] + u_n [ 2 :]) \ + dt * f ( x [ 1 : - 1 ], t [ n ])

For example, the vectorized version runs 70 times faster than the scalar version in a case with 100 time steps and a spatial mesh of \(10^5\) cells.

The solver_FE function also features a callback function such that the user can process the solution at each time level. The callback function looks like user_action(u, x, t, n) , where u is the array containing the solution at time level n , x holds all the spatial mesh points, while t holds all the temporal mesh points. Apart from the vectorized loop over the spatial mesh points, the callback function, and a bit more complicated setting of the source f it is not specified ( None ), the solver_FE function is identical to solver_FE_simple above:

def solver_FE ( I , a , f , L , dt , F , T , user_action = None , version = 'scalar' ): """ Vectorized implementation of solver_FE_simple. """ import time ; t0 = time . clock () # for measuring the CPU time Nt = int ( round ( T / float ( dt ))) t = np . linspace ( 0 , Nt * dt , Nt + 1 ) # Mesh points in time dx = np . sqrt ( a * dt / F ) Nx = int ( round ( L / dx )) x = np . linspace ( 0 , L , Nx + 1 ) # Mesh points in space # Make sure dx and dt are compatible with x and t dx = x [ 1 ] - x [ 0 ] dt = t [ 1 ] - t [ 0 ] u = np . zeros ( Nx + 1 ) # solution array u_n = np . zeros ( Nx + 1 ) # solution at t-dt # Set initial condition for i in range ( 0 , Nx + 1 ): u_n [ i ] = I ( x [ i ]) if user_action is not None : user_action ( u_n , x , t , 0 ) for n in range ( 0 , Nt ): # Update all inner points if version == 'scalar' : for i in range ( 1 , Nx ): u [ i ] = u_n [ i ] +\ F * ( u_n [ i - 1 ] - 2 * u_n [ i ] + u_n [ i + 1 ]) +\ dt * f ( x [ i ], t [ n ]) elif version == 'vectorized' : u [ 1 : Nx ] = u_n [ 1 : Nx ] + \ F * ( u_n [ 0 : Nx - 1 ] - 2 * u_n [ 1 : Nx ] + u_n [ 2 : Nx + 1 ]) +\ dt * f ( x [ 1 : Nx ], t [ n ]) else : raise ValueError ( 'version= %s ' % version ) # Insert boundary conditions u [ 0 ] = 0 ; u [ Nx ] = 0 if user_action is not None : user_action ( u , x , t , n + 1 ) # Switch variables before next step u_n , u = u , u_n t1 = time . clock () return t1 - t0

Verification¶

Exact solution of discrete equations¶

Before thinking about running the functions in the previous section, we need to construct a suitable test example for verification. It appears that a manufactured solution that is linear in time and at most quadratic in space fulfills the Forward Euler scheme exactly. With the restriction that \(u=0\) for \(x=0,L\), we can try the solution

\[u(x,t) = 5tx(L-x){\thinspace .}\]

Inserted in the PDE, it requires a source term

\[f(x,t) = 10{\alpha} t + 5x(L-x){\thinspace .}\]

With the formulas from Finite differences of we can easily check that the manufactured u fulfills the scheme:

\[\begin{split}\begin{align*} \lbrack D_t^+ u = {\alpha} D_x D_x u + f\rbrack^n_i &= \lbrack 5x(L-x)D_t^+ t = 5 t{\alpha} D_x D_x (xL-x^2) +\\ &\quad\quad 10{\alpha} t + 5x(L-x)\rbrack^n_i\\ &= \lbrack 5x(L-x) = 5 t{\alpha} (-2) + 10{\alpha} t + 5x(L-x) \rbrack^n_i, \end{align*}\end{split}\]

which is a 0=0 expression. The computation of the source term, given any \(u\), is easily automated with sympy :

import sympy as sym x , t , a , L = sym . symbols ( 'x t a L' ) u = x * ( L - x ) * 5 * t def pde ( u ): return sym . diff ( u , t ) - a * sym . diff ( u , x , x ) f = sym . simplify ( pde ( u ))

Now we can choose any expression for u and automatically get the suitable source term f . However, the manufactured solution u will in general not be exactly reproduced by the scheme: only constant and linear functions are differentiated correctly by a forward difference, while only constant, linear, and quadratic functions are differentiated exactly by a \([D_xD_x u]^n_i\) difference.

The numerical code will need to access the u and f above as Python functions. The exact solution is wanted as a Python function u_exact(x, t) , while the source term is wanted as f(x, t) . The parameters a and L in u and f above are symbols and must be replaced by float objects in a Python function. This can be done by redefining a and L as float objects and performing substitutions of symbols by numbers in u and f . The appropriate code looks like this:

a = 0.5 L = 1.5 u_exact = sym . lambdify ( [ x , t ], u . subs ( 'L' , L ) . subs ( 'a' , a ), modules = 'numpy' ) f = sym . lambdify ( [ x , t ], f . subs ( 'L' , L ) . subs ( 'a' , a ), modules = 'numpy' ) I = lambda x : u_exact ( x , 0 )

Here we also make a function I for the initial condition.

The idea now is that our manufactured solution should be exactly reproduced by the code (to machine precision). For this purpose we make a test function for comparing the exact and numerical solutions at the end of the time interval:

def test_solver_FE (): # Define u_exact, f, I as explained above dx = L / 3 # 3 cells F = 0.5 dt = F * dx ** 2 u , x , t , cpu = solver_FE_simple ( I = I , a = a , f = f , L = L , dt = dt , F = F , T = 2 ) u_e = u_exact ( x , t [ - 1 ]) diff = abs ( u_e - u ) . max () tol = 1E-14 assert diff < tol , 'max diff solver_FE_simple: %g ' % diff u , x , t , cpu = solver_FE ( I = I , a = a , f = f , L = L , dt = dt , F = F , T = 2 , user_action = None , version = 'scalar' ) u_e = u_exact ( x , t [ - 1 ]) diff = abs ( u_e - u ) . max () tol = 1E-14 assert diff < tol , 'max diff solver_FE, scalar: %g ' % diff u , x , t , cpu = solver_FE ( I = I , a = a , f = f , L = L , dt = dt , F = F , T = 2 , user_action = None , version = 'vectorized' ) u_e = u_exact ( x , t [ - 1 ]) diff = abs ( u_e - u ) . max () tol = 1E-14 assert diff < tol , 'max diff solver_FE, vectorized: %g ' % diff

The critical value \(F=0.5\)

We emphasize that the value F=0.5 is critical: the tests above will fail if F has a larger value. This is because the Forward Euler scheme is unstable for \(F>1/2\).

The reader may wonder if \(F=1/2\) is safe or if \(F<1/2\) should be required. Experiments show that \(F=1/2\) works fine for \(u_t={\alpha} u_{xx}\), so there is no accumulation of rounding errors in this case and hence no need to introduce any safety factor to keep \(F\) away from the limiting value 0.5.

Checking convergence rates¶

If our chosen exact solution does not satisfy the discrete equations exactly, we are left with checking the convergence rates, just as we did previously for the wave equation. However, with the Euler scheme here, we have different accuracies in time and space, since we use a second order approximation to the spatial derivative and a first order approximation to the time derivative. Thus, we must expect different convergence rates in time and space. For the numerical error,

\[E = C_t\Delta t^r + C_x\Delta x^p,\]

we should get convergence rates \(r=1\) and \(p=2\) (\(C_t\) and \(C_x\) are unknown constants). As previously, in the section Manufactured solution and estimation of convergence rates, we simplify matters by introducing a single discretization parameter \(h\):

\[h = \Delta t,\quad \Delta x = Kh^{r/p},\]

where \(K\) is any constant. This allows us to factor out only one discretization parameter \(h\) from the formula:

\[E = C_t h + C_x (K^{r/p})^p = \tilde C h^r,\quad \tilde C = C_t + C_sK^r{\thinspace .}\]

The computed rate \(r\) should approach 1 with increasing resolution.

It is tempting, for simplicity, to choose \(K=1\), which gives \(\Delta x = h^{r/p}\), expected to be \(\sqrt{\Delta t}\). However, we have to control the stability requirement: \(F\leq\frac{1}{2}\), which means

\[\frac{{\alpha}\Delta t}{\Delta x^2}\leq\frac{1}{2}\quad\Rightarrow \quad \Delta x \geq \sqrt{2{\alpha}}h^{1/2} ,\]

implying that \(K=\sqrt{2{\alpha}}\) is our choice in experiments where we lie on the stability limit \(F=1/2\).

Numerical experiments¶

When a test function like the one above runs silently without errors, we have some evidence for a correct implementation of the numerical method. The next step is to do some experiments with more interesting solutions.

We target a scaled diffusion problem where \(x/L\) is a new spatial coordinate and \({\alpha} t/L^2\) is a new time coordinate. The source term \(f\) is omitted, and \(u\) is scaled by \(\max_{x\in [0,L]}|I(x)|\) (see Scaling of the diffusion equation [Ref03] for details). The governing PDE is then

\[\frac{\partial u}{\partial t} = \frac{\partial^2 u}{\partial x^2},\]

in the spatial domain \([0,L]\), with boundary conditions \(u(0)=u(1)=0\). Two initial conditions will be tested: a discontinuous plug,

\[\begin{split}I(x) = \left\lbrace\begin{array}{ll} 0, & |x-L/2| > 0.1\\ 1, & \hbox{otherwise} \end{array}\right.\end{split}\]

and a smooth Gaussian function,

\[I(x) = e^{-\frac{1}{2\sigma^2}(x-L/2)^2}{\thinspace .}\]

The functions plug and gaussian in diffu1D_u0.py run the two cases, respectively:

def plug ( scheme = 'FE' , F = 0.5 , Nx = 50 ): L = 1. a = 1. T = 0.1 # Compute dt from Nx and F dx = L / Nx ; dt = F / a * dx ** 2 def I ( x ): """Plug profile as initial condition.""" if abs ( x - L / 2.0 ) > 0.1 : return 0 else : return 1 cpu = viz ( I , a , L , dt , F , T , umin =- 0.1 , umax = 1.1 , scheme = scheme , animate = True , framefiles = True ) print 'CPU time:' , cpu def gaussian ( scheme = 'FE' , F = 0.5 , Nx = 50 , sigma = 0.05 ): L = 1. a = 1. T = 0.1 # Compute dt from Nx and F dx = L / Nx ; dt = F / a * dx ** 2 def I ( x ): """Gaussian profile as initial condition.""" return exp ( - 0.5 * (( x - L / 2.0 ) ** 2 ) / sigma ** 2 ) u , cpu = viz ( I , a , L , dt , F , T , umin =- 0.1 , umax = 1.1 , scheme = scheme , animate = True , framefiles = True ) print 'CPU time:' , cpu

These functions make use of the function viz for running the solver and visualizing the solution using a callback function with plotting:

def viz ( I , a , L , dt , F , T , umin , umax , scheme = 'FE' , animate = True , framefiles = True ): def plot_u ( u , x , t , n ): plt . plot ( x , u , 'r-' , axis = [ 0 , L , umin , umax ], title = 't= %f ' % t [ n ]) if framefiles : plt . savefig ( 'tmp_frame %04d .png' % n ) if t [ n ] == 0 : time . sleep ( 2 ) elif not framefiles : # It takes time to write files so pause is needed # for screen only animation time . sleep ( 0.2 ) user_action = plot_u if animate else lambda u , x , t , n : None cpu = eval ( 'solver_' + scheme )( I , a , L , dt , F , T , user_action = user_action ) return cpu

Notice that this viz function stores all the solutions in a list solutions in the callback function. Modern computers have hardly any problem with storing a lot of such solutions for moderate values of \(N_x\) in 1D problems, but for 2D and 3D problems, this technique cannot be used and solutions must be stored in files.

Our experiments employ a time step \(\Delta t = 0.0002\) and simulate for \(t\in [0,0.1]\). First we try the highest value of \(F\): \(F=0.5\). This resolution corresponds to \(N_x=50\). A possible terminal command is

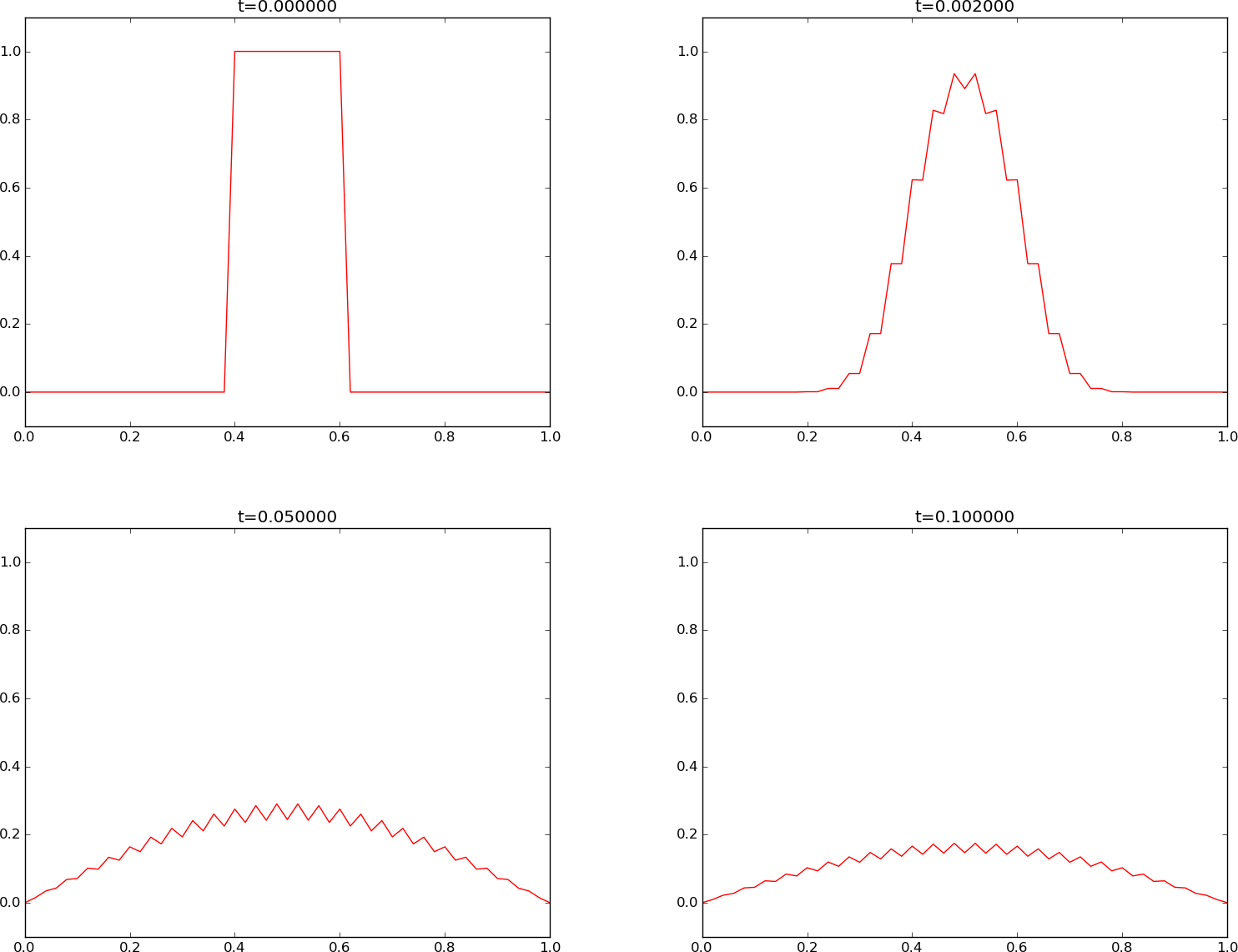

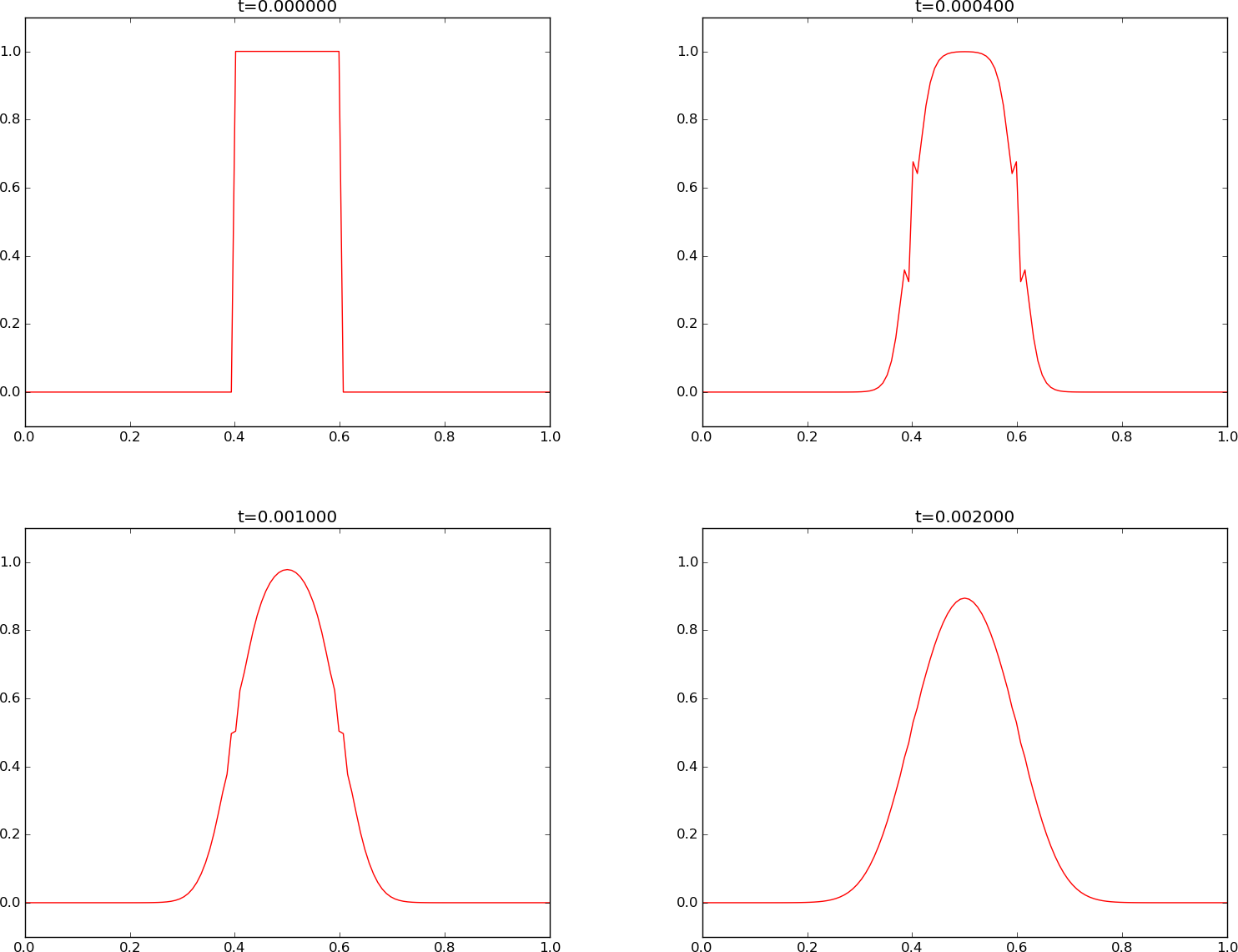

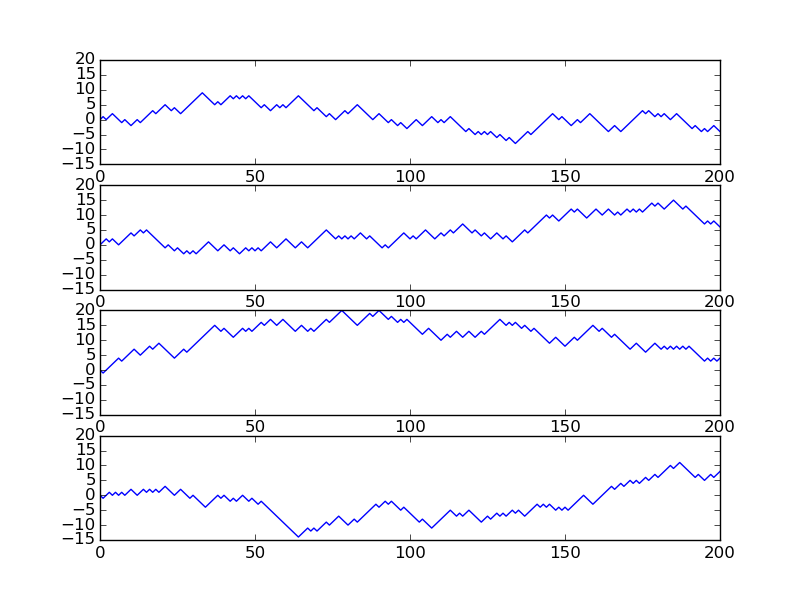

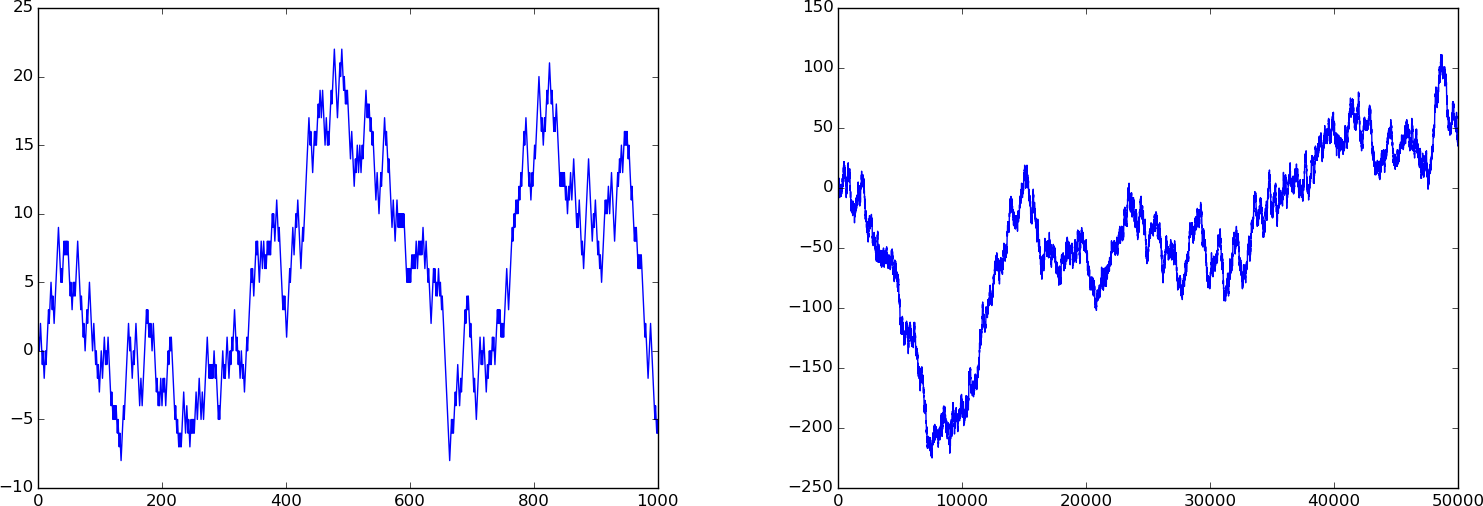

Terminal> python -c 'from diffu1D_u0 import gaussian gaussian("solver_FE", F=0.5, dt=0.0002)' The \(u(x,t)\) curve as a function of \(x\) is shown in Figure Forward Euler scheme for at four time levels.

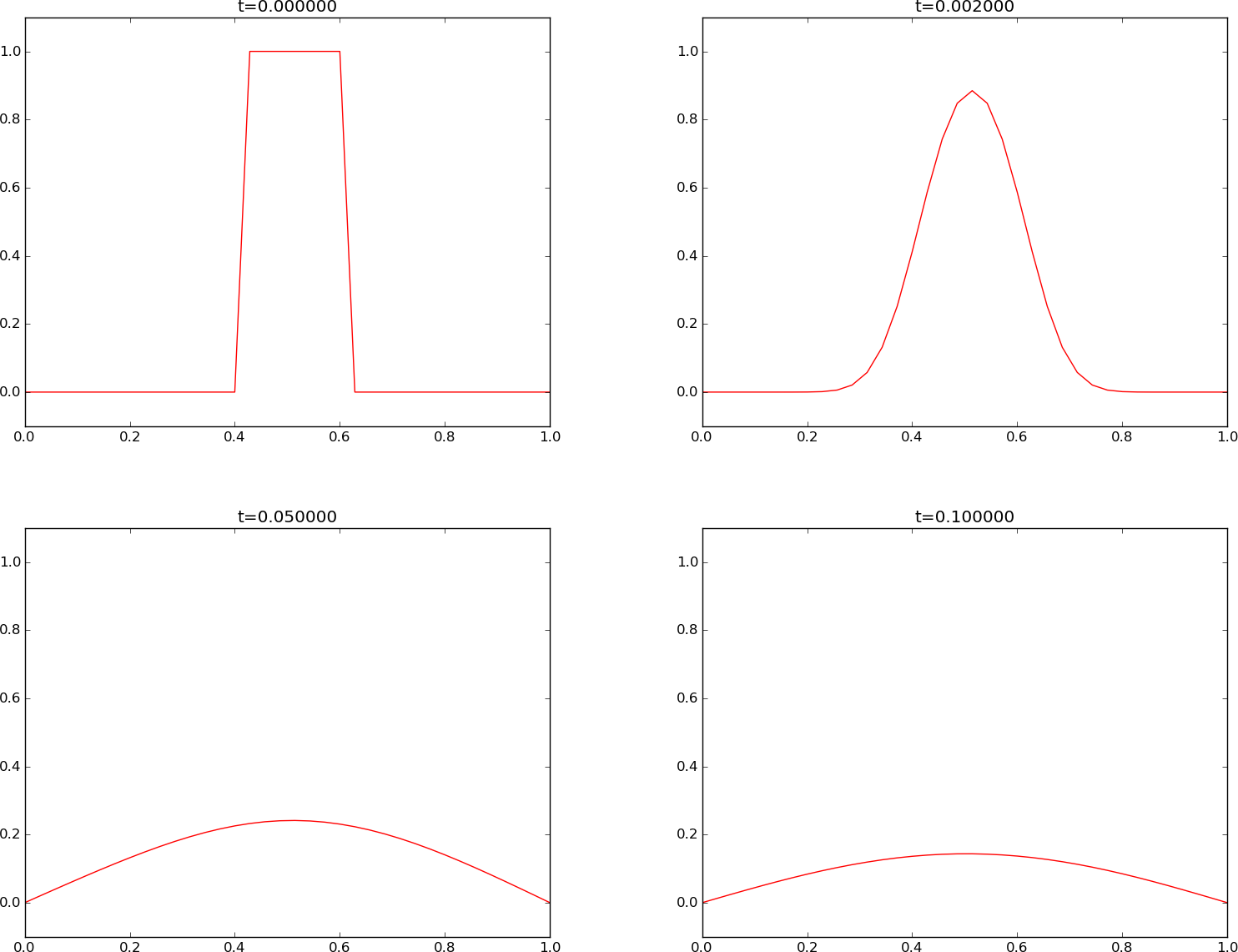

We see that the curves have saw-tooth waves in the beginning of the simulation. This non-physical noise is smoothed out with time, but solutions of the diffusion equations are known to be smooth, and this numerical solution is definitely not smooth. Lowering \(F\) helps: \(F\leq 0.25\) gives a smooth solution, see Figure Forward Euler scheme for .

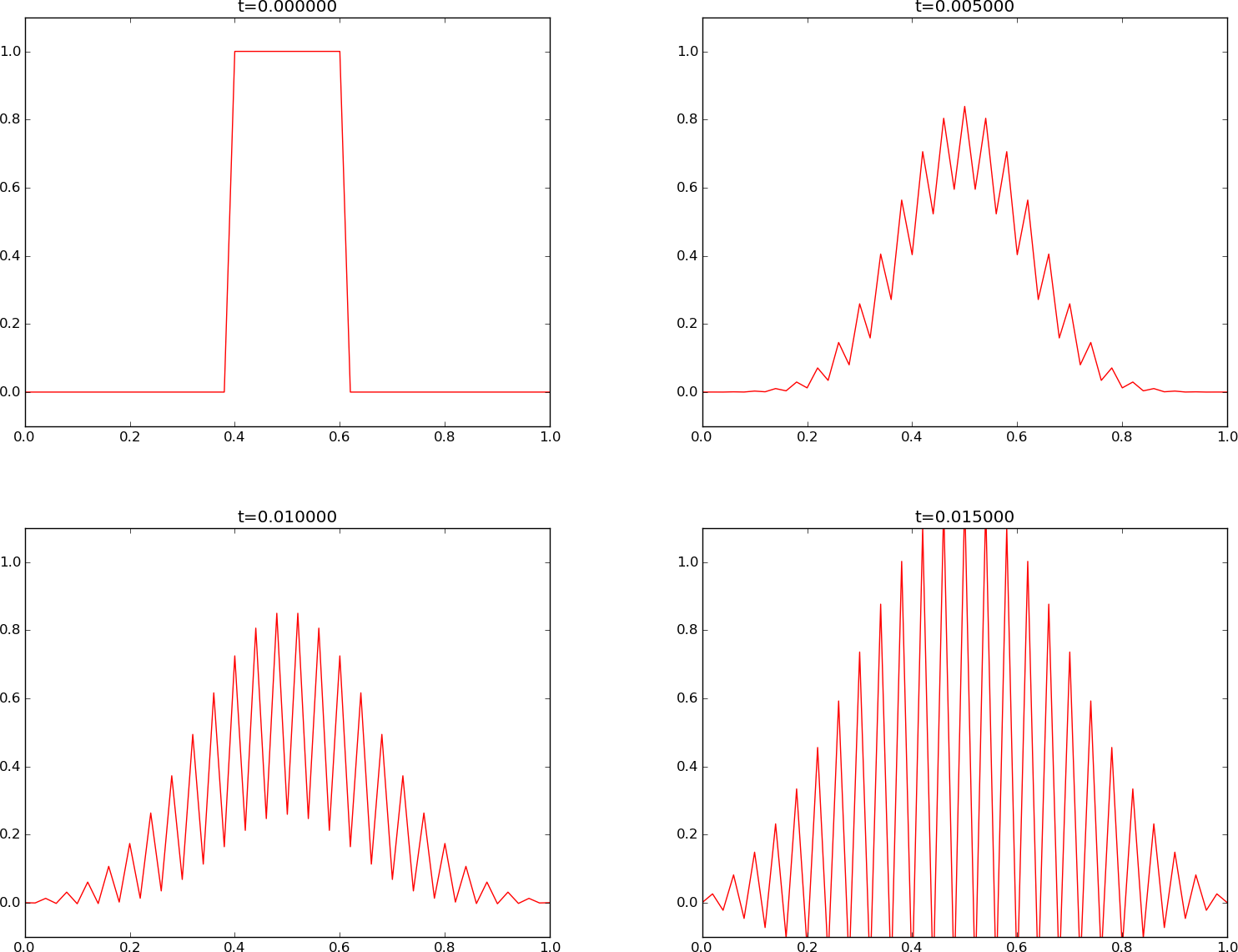

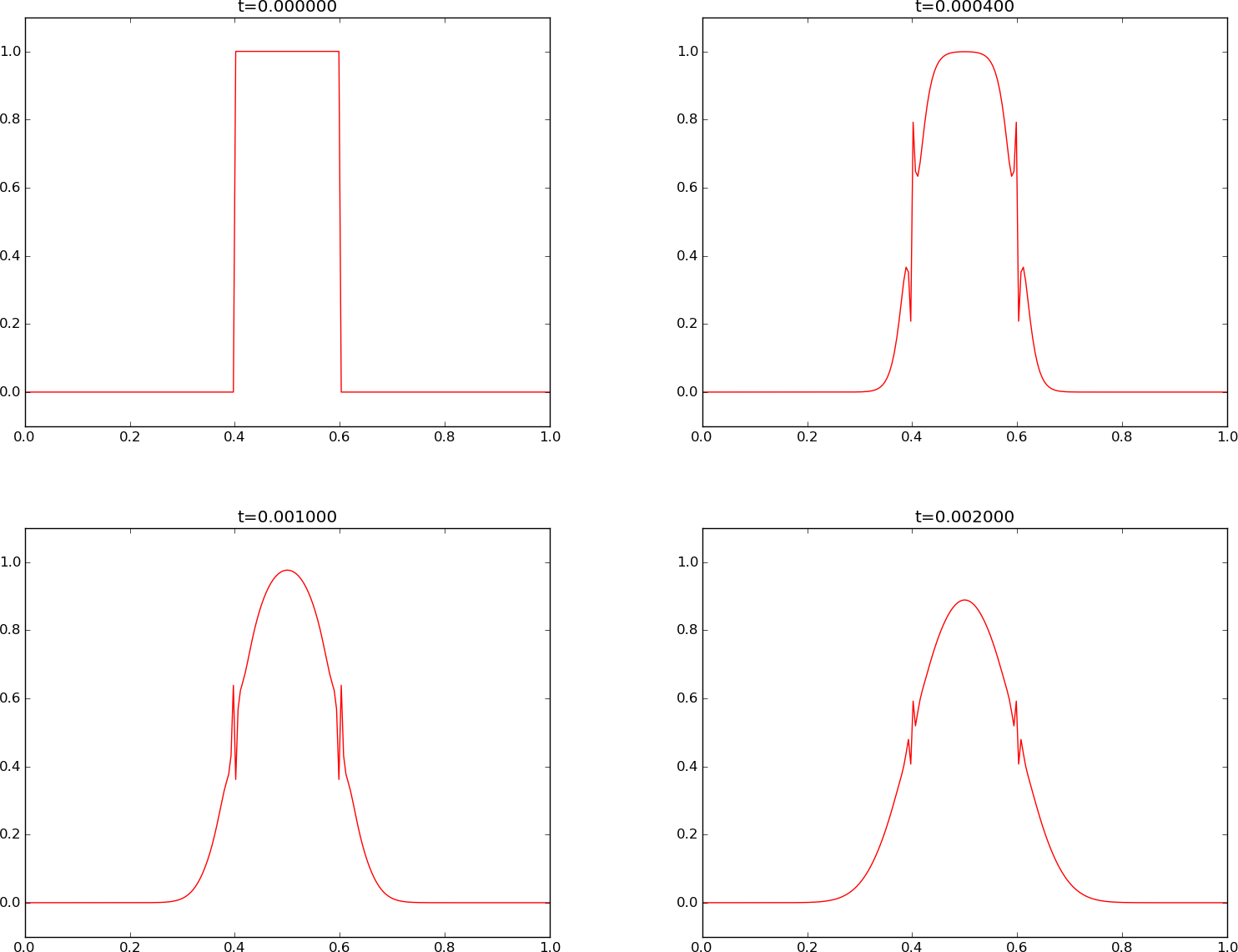

Increasing \(F\) slightly beyond the limit 0.5, to \(F=0.51\), gives growing, non-physical instabilities, as seen in Figure Forward Euler scheme for .

Forward Euler scheme for \(F=0.5\)

Forward Euler scheme for \(F=0.25\)

Forward Euler scheme for \(F=0.51\)

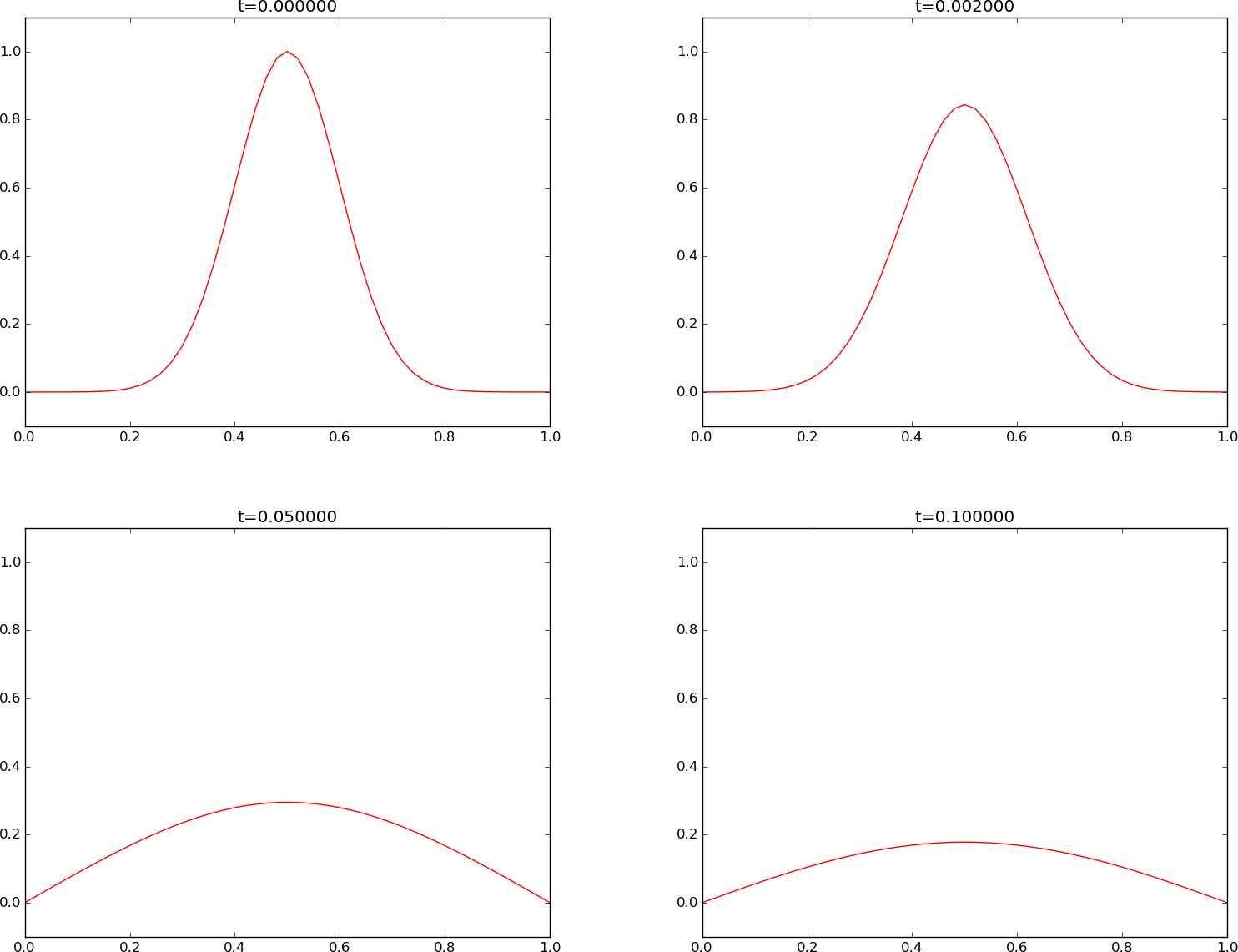

Instead of a discontinuous initial condition we now try the smooth Gaussian function for \(I(x)\). A simulation for \(F=0.5\) is shown in Figure Forward Euler scheme for . Now the numerical solution is smooth for all times, and this is true for any \(F\leq 0.5\).

Forward Euler scheme for \(F=0.5\)

Experiments with these two choices of \(I(x)\) reveal some important observations:

- The Forward Euler scheme leads to growing solutions if \(F>\frac{1}{2}\).

- \(I(x)\) as a discontinuous plug leads to a saw tooth-like noise for \(F=\frac{1}{2}\), which is absent for \(F\leq\frac{1}{4}\).

- The smooth Gaussian initial function leads to a smooth solution for all relevant \(F\) values (\(F\leq \frac{1}{2}\)).

Implicit methods for the 1D diffusion equation¶

Simulations with the Forward Euler scheme shows that the time step restriction, \(F\leq\frac{1}{2}\), which means \(\Delta t \leq \Delta x^2/(2{\alpha})\), may be relevant in the beginning of the diffusion process, when the solution changes quite fast, but as time increases, the process slows down, and a small \(\Delta t\) may be inconvenient. By using implicit schemes, which lead to coupled systems of linear equations to be solved at each time level, any size of \(\Delta t\) is possible (but the accuracy decreases with increasing \(\Delta t\)). The Backward Euler scheme, derived and implemented below, is the simplest implicit scheme for the diffusion equation.

Backward Euler scheme¶

We now apply a backward difference in time in (353), but the same central difference in space:

\[\tag{358} [D_t^- u = D_xD_x u + f]^n_i,\]

which written out reads

\[\tag{359} \frac{u^{n}_i-u^{n-1}_i}{\Delta t} = {\alpha}\frac{u^{n}_{i+1} - 2u^n_i + u^n_{i-1}}{\Delta x^2} + f_i^n{\thinspace .}\]

Now we assume \(u^{n-1}_i\) is already computed, but all quantities at the "new" time level \(n\) are unknown. This time it is not possible to solve with respect to \(u_i^{n}\) because this value couples to its neighbors in space, \(u^n_{i-1}\) and \(u^n_{i+1}\), which are also unknown. Let us examine this fact for the case when \(N_x=3\). Equation (359) written for \(i=1,\ldots,Nx-1= 1,2\) becomes

\[\tag{360} \frac{u^{n}_1-u^{n-1}_1}{\Delta t} = {\alpha}\frac{u^{n}_{2} - 2u^n_1 + u^n_{0}}{\Delta x^2} + f_1^n\]

\[\tag{361} \frac{u^{n}_2-u^{n-1}_2}{\Delta t} = {\alpha}\frac{u^{n}_{3} - 2u^n_2 + u^n_{1}}{\Delta x^2} + f_2^n\]

The boundary values \(u^n_0\) and \(u^n_3\) are known as zero. Collecting the unknown new values \(u^n_1\) and \(u^n_2\) on the left-hand side and multiplying by \(\Delta t\) gives

\[\tag{362} \left(1+ 2F\right) u^{n}_1 - F u^{n}_{2} = u^{n-1}_1 + \Delta t f_1^n,\]

\[\tag{363} - F u^{n}_{1} + \left(1+ 2F\right) u^{n}_2 = u^{n-1}_2 + \Delta t f_2^n{\thinspace .}\]

This is a coupled \(2\times 2\) system of algebraic equations for the unknowns \(u^n_1\) and \(u^n_2\). The equivalent matrix form is

\[\begin{split}\left(\begin{array}{cc} 1+ 2F & - F\\ - F & 1+ 2F \end{array}\right) \left(\begin{array}{c} u^{n}_1\\ u^{n}_2 \end{array}\right) = \left(\begin{array}{c} u^{n-1}_1 + \Delta t f_1^n\\ u^{n-1}_2 + \Delta t f_2^n \end{array}\right)\end{split}\]

Terminology: implicit vs. explicit methods

Discretization methods that lead to a coupled system of equations for the unknown function at a new time level are said to be implicit methods. The counterpart, explicit methods, refers to discretization methods where there is a simple explicit formula for the values of the unknown function at each of the spatial mesh points at the new time level. From an implementational point of view, implicit methods are more comprehensive to code since they require the solution of coupled equations, i.e., a matrix system, at each time level. With explicit methods we have a closed-form formula for the value of the unknown at each mesh point.

Very often explicit schemes have a restriction on the size of the time step that can be relaxed by using implicit schemes. In fact, implicit schemes are frequently unconditionally stable, so the size of the time step is governed by accuracy and not by stability. This is the great advantage of implicit schemes.

In the general case, (359) gives rise to a coupled \((N_x-1)\times (N_x-1)\) system of algebraic equations for all the unknown \(u^n_i\) at the interior spatial points \(i=1,\ldots,N_x-1\). Collecting the unknowns on the left-hand side, (359) can be written

\[\tag{364} - F u^n_{i-1} + \left(1+ 2F \right) u^{n}_i - F u^n_{i+1} = u_{i-1}^{n-1},\]

for \(i=1,\ldots,N_x-1\). One can either view these equations as a system for where the \(u^{n}_i\) values at the internal mesh points, \(i=1,\ldots,N_x-1\), are unknown, or we may append the boundary values \(u^n_0\) and \(u^n_{N_x}\) to the system. In the latter case, all \(u^n_i\) for \(i=0,\ldots,N_x\) are considered unknown, and we must add the boundary equations to the \(N_x-1\) equations in (364):

\[\tag{365} u_0^n = 0,\]

\[\tag{366} u_{N_x}^n = 0{\thinspace .}\]

A coupled system of algebraic equations can be written on matrix form, and this is important if we want to call up ready-made software for solving the system. The equations (364) and (365)–(366) correspond to the matrix equation

\[AU = b\]

where \(U=(u^n_0,\ldots,u^n_{N_x})\), and the matrix \(A\) has the following structure:

\[\begin{split}\tag{367} A = \left( \begin{array}{cccccccccc} A_{0,0} & A_{0,1} & 0 &\cdots & \cdots & \cdots & \cdots & \cdots & 0 \\ A_{1,0} & A_{1,1} & A_{1,2} & \ddots & & & & & \vdots \\ 0 & A_{2,1} & A_{2,2} & A_{2,3} & \ddots & & & & \vdots \\ \vdots & \ddots & & \ddots & \ddots & 0 & & & \vdots \\ \vdots & & \ddots & \ddots & \ddots & \ddots & \ddots & & \vdots \\ \vdots & & & 0 & A_{i,i-1} & A_{i,i} & A_{i,i+1} & \ddots & \vdots \\ \vdots & & & & \ddots & \ddots & \ddots &\ddots & 0 \\ \vdots & & & & &\ddots & \ddots &\ddots & A_{N_x-1,N_x} \\ 0 &\cdots & \cdots &\cdots & \cdots & \cdots & 0 & A_{N_x,N_x-1} & A_{N_x,N_x} \end{array} \right)\end{split}\]

The nonzero elements are given by

\[\tag{368} A_{i,i-1} = -F\]

\[\tag{369} A_{i,i} = 1+ 2F\]

\[\tag{370} A_{i,i+1} = -F\]

in the equations for internal points, \(i=1,\ldots,N_x-1\). The first and last equation correspond to the boundary condition, where we know the solution, and therefore we must have

\[\tag{371} A_{0,0} = 1,\]

\[\tag{372} A_{0,1} = 0,\]

\[\tag{373} A_{N_x,N_x-1} = 0,\]

\[\tag{374} A_{N_x,N_x} = 1{\thinspace .}\]

The right-hand side \(b\) is written as

\[\begin{split}\tag{375} b = \left(\begin{array}{c} b_0\\ b_1\\ \vdots\\ b_i\\ \vdots\\ b_{N_x} \end{array}\right)\end{split}\]

with

\[\tag{376} b_0 = 0,\]

\[\tag{377} b_i = u^{n-1}_i,\quad i=1,\ldots,N_x-1,\]

\[\tag{378} b_{N_x} = 0 {\thinspace .}\]

We observe that the matrix \(A\) contains quantities that do not change in time. Therefore, \(A\) can be formed once and for all before we enter the recursive formulas for the time evolution. The right-hand side \(b\), however, must be updated at each time step. This leads to the following computational algorithm, here sketched with Python code:

x = np . linspace ( 0 , L , Nx + 1 ) # mesh points in space dx = x [ 1 ] - x [ 0 ] t = np . linspace ( 0 , T , N + 1 ) # mesh points in time u = np . zeros ( Nx + 1 ) # unknown u at new time level u_n = np . zeros ( Nx + 1 ) # u at the previous time level # Data structures for the linear system A = np . zeros (( Nx + 1 , Nx + 1 )) b = np . zeros ( Nx + 1 ) for i in range ( 1 , Nx ): A [ i , i - 1 ] = - F A [ i , i + 1 ] = - F A [ i , i ] = 1 + 2 * F A [ 0 , 0 ] = A [ Nx , Nx ] = 1 # Set initial condition u(x,0) = I(x) for i in range ( 0 , Nx + 1 ): u_n [ i ] = I ( x [ i ]) import scipy.linalg for n in range ( 0 , Nt ): # Compute b and solve linear system for i in range ( 1 , Nx ): b [ i ] = - u_n [ i ] b [ 0 ] = b [ Nx ] = 0 u [:] = scipy . linalg . solve ( A , b ) # Update u_n before next step u_n [:] = u

Regarding verification, the same considerations apply as for the Forward Euler method (the section Verification).

Sparse matrix implementation¶

We have seen from (367) that the matrix \(A\) is tridiagonal. The code segment above used a full, dense matrix representation of \(A\), which stores a lot of values we know are zero beforehand, and worse, the solution algorithm computes with all these zeros. With \(N_x+1\) unknowns, the work by the solution algorithm is \(\frac{1}{3} (N_x+1)^3\) and the storage requirements \((N_x+1)^2\). By utilizing the fact that \(A\) is tridiagonal and employing corresponding software tools that work with the three diagonals, the work and storage demands can be proportional to \(N_x\) only. This leads to a dramatic improvement: with \(N_x=200\), which is a realistic resolution, the code runs about 40,000 times faster and reduces the storage to just 1.5%! It is no doubt that we should take advantage of the fact that \(A\) is tridiagonal.

The key idea is to apply a data structure for a tridiagonal or sparse matrix. The scipy.sparse package has relevant utilities. For example, we can store only the nonzero diagonals of a matrix. The package also has linear system solvers that operate on sparse matrix data structures. The code below illustrates how we can store only the main diagonal and the upper and lower diagonals.

# Representation of sparse matrix and right-hand side main = np . zeros ( Nx + 1 ) lower = np . zeros ( Nx ) upper = np . zeros ( Nx ) b = np . zeros ( Nx + 1 ) # Precompute sparse matrix main [:] = 1 + 2 * F lower [:] = - F upper [:] = - F # Insert boundary conditions main [ 0 ] = 1 main [ Nx ] = 1 A = scipy . sparse . diags ( diagonals = [ main , lower , upper ], offsets = [ 0 , - 1 , 1 ], shape = ( Nx + 1 , Nx + 1 ), format = 'csr' ) print A . todense () # Check that A is correct # Set initial condition for i in range ( 0 , Nx + 1 ): u_n [ i ] = I ( x [ i ]) for n in range ( 0 , Nt ): b = u_n b [ 0 ] = b [ - 1 ] = 0.0 # boundary conditions u [:] = scipy . sparse . linalg . spsolve ( A , b ) u_n [:] = u

The scipy.sparse.linalg.spsolve function utilizes the sparse storage structure of A and performs, in this case, a very efficient Gaussian elimination solve.

The program diffu1D_u0.py contains a function solver_BE , which implements the Backward Euler scheme sketched above. As mentioned in the section Forward Euler scheme, the functions plug and gaussian runs the case with \(I(x)\) as a discontinuous plug or a smooth Gaussian function. All experiments point to two characteristic features of the Backward Euler scheme: 1) it is always stable, and 2) it always gives a smooth, decaying solution.

Crank-Nicolson scheme¶

The idea in the Crank-Nicolson scheme is to apply centered differences in space and time, combined with an average in time. We demand the PDE to be fulfilled at the spatial mesh points, but midway between the points in the time mesh:

\[\frac{\partial}{\partial t} u(x_i, t_{n+\frac{1}{2}}) = {\alpha}\frac{\partial^2}{\partial x^2}u(x_i, t_{n+\frac{1}{2}}) + f(x_i,t_{n+\frac{1}{2}}),\]

for \(i=1,\ldots,N_x-1\) and \(n=0,\ldots, N_t-1\).

With centered differences in space and time, we get

\[[D_t u = {\alpha} D_xD_x u + f]^{n+\frac{1}{2}}_i{\thinspace .}\]

On the right-hand side we get an expression

\[\frac{1}{\Delta x^2}\left(u^{n+\frac{1}{2}}_{i-1} - 2u^{n+\frac{1}{2}}_i + u^{n+\frac{1}{2}}_{i+1}\right) + f_i^{n+\frac{1}{2}}{\thinspace .}\]

This expression is problematic since \(u^{n+\frac{1}{2}}_i\) is not one of the unknowns we compute. A possibility is to replace \(u^{n+\frac{1}{2}}_i\) by an arithmetic average:

\[u^{n+\frac{1}{2}}_i\approx \frac{1}{2}\left(u^{n}_i +u^{n+1}_{i}\right){\thinspace .}\]

In the compact notation, we can use the arithmetic average notation \(\overline{u}^t\):

\[[D_t u = {\alpha} D_xD_x \overline{u}^t + f]^{n+\frac{1}{2}}_i{\thinspace .}\]

We can also use an average for \(f_i^{n+\frac{1}{2}}\):

\[[D_t u = {\alpha} D_xD_x \overline{u}^t + \overline{f}^t]^{n+\frac{1}{2}}_i{\thinspace .}\]

After writing out the differences and average, multiplying by \(\Delta t\), and collecting all unknown terms on the left-hand side, we get

\[u^{n+1}_i - \frac{1}{2} F(u^{n+1}_{i-1} - 2u^{n+1}_i + u^{n+1}_{i+1}) = u^{n}_i + \frac{1}{2} F(u^{n}_{i-1} - 2u^{n}_i + u^{n}_{i+1})\nonumber\]

\[\tag{379} \qquad \frac{1}{2} f_i^{n+1} + \frac{1}{2} f_i^n{\thinspace .}\]

Also here, as in the Backward Euler scheme, the new unknowns \(u^{n+1}_{i-1}\), \(u^{n+1}_{i}\), and \(u^{n+1}_{i+1}\) are coupled in a linear system \(AU=b\), where \(A\) has the same structure as in (367), but with slightly different entries:

\[\tag{380} A_{i,i-1} = -\frac{1}{2} F\]

\[\tag{381} A_{i,i} = 1 + F\]

\[\tag{382} A_{i,i+1} = -\frac{1}{2} F\]

in the equations for internal points, \(i=1,\ldots,N_x-1\). The equations for the boundary points correspond to

\[\tag{383} A_{0,0} = 1,\]

\[\tag{384} A_{0,1} = 0,\]

\[\tag{385} A_{N_x,N_x-1} = 0,\]

\[\tag{386} A_{N_x,N_x} = 1{\thinspace .}\]

The right-hand side \(b\) has entries

\[\tag{387} b_0 = 0,\]

\[\tag{388} b_i = u^{n-1}_i + \frac{1}{2}(f_i^n + f_i^{n+1}),\quad i=1,\ldots,N_x-1,\]

\[\tag{389} b_{N_x} = 0 {\thinspace .}\]

When verifying some implementation of the Crank-Nicolson scheme by convergence rate testing, one should note that the scheme is second order accurate in both space and time. The numerical error then reads

\[E = C_t\Delta t^r + C_x\Delta x^r,\]

where \(r=2\) (\(C_t\) and \(C_x\) are unknown constants, as before). When introducing a single discretization parameter, we may now simply choose

\[h = \Delta x = \Delta t,\]

which gives

\[E = C_th^r + C_xh^r = (C_t + C_x)h^r,\]

where \(r\) should approach 2 as resolution is increased in the convergence rate computations.

The unifying \(\theta\) rule¶

For the equation

\[\frac{\partial u}{\partial t} = G(u),\]

where \(G(u)\) is some spatial differential operator, the \(\theta\)-rule looks like

\[\frac{u^{n+1}_i - u^n_i}{\Delta t} = \theta G(u^{n+1}_i) + (1-\theta) G(u^{n}_i){\thinspace .}\]

The important feature of this time discretization scheme is that we can implement one formula and then generate a family of well-known and widely used schemes:

- \(\theta=0\) gives the Forward Euler scheme in time

- \(\theta=1\) gives the Backward Euler scheme in time

- \(\theta=\frac{1}{2}\) gives the Crank-Nicolson scheme in time

In the compact difference notation, we write the \(\theta\) rule as

\[[D_t u = {\alpha} D_xD_x u]^{n+\theta}{\thinspace .}\]

We have that \(t_{n+\theta} = \theta t_{n+1} + (1-\theta)t_n\).

Applied to the 1D diffusion problem, the \(\theta\)-rule gives

\[\begin{split}\begin{align*} \frac{u^{n+1}_i-u^n_i}{\Delta t} &= {\alpha}\left( \theta \frac{u^{n+1}_{i+1} - 2u^{n+1}_i + u^{n+1}_{i-1}}{\Delta x^2} + (1-\theta) \frac{u^{n}_{i+1} - 2u^n_i + u^n_{i-1}}{\Delta x^2}\right)\\ &\qquad + \theta f_i^{n+1} + (1-\theta)f_i^n {\thinspace .} \end{align*}\end{split}\]

This scheme also leads to a matrix system with entries

\[A_{i,i-1} = -F\theta,\quad A_{i,i} = 1+2F\theta\quad, A_{i,i+1} = -F\theta,\]

while right-hand side entry \(b_i\) is

\[b_i = u^n_{i} + F(1-\theta) \frac{u^{n}_{i+1} - 2u^n_i + u^n_{i-1}}{\Delta x^2} + \Delta t\theta f_i^{n+1} + \Delta t(1-\theta)f_i^n{\thinspace .}\]

The corresponding entries for the boundary points are as in the Backward Euler and Crank-Nicolson schemes listed earlier.

Note that convergence rate testing with implementations of the theta rule must adjust the error expression according to which of the underlying schemes is actually being run. That is, if \(\theta=0\) (i.e., Forward Euler) or \(\theta=1\) (i.e., Backward Euler), there should be first order convergence, whereas with \(\theta=0.5\) (i.e., Crank-Nicolson), one should get second order convergence (as outlined in previous sections).

Experiments¶

We can repeat the experiments from the section Numerical experiments to see if the Backward Euler or Crank-Nicolson schemes have problems with sawtooth-like noise when starting with a discontinuous initial condition. We can also verify that we can have \(F>\frac{1}{2}\), which allows larger time steps than in the Forward Euler method.

Backward Euler scheme for \(F=0.5\)

The Backward Euler scheme always produces smooth solutions for any \(F\). Figure Backward Euler scheme for shows one example. Note that the mathematical discontinuity at \(t=0\) leads to a linear variation on a mesh, but the approximation to a jump becomes better as \(N_x\) increases. In our simulation we specify \(\Delta t\) and \(F\), and \(N_x\) is set to \(L/\sqrt{{\alpha}\Delta t/F}\). Since \(N_x\sim\sqrt{F}\), the discontinuity looks sharper in the Crank-Nicolson simulations with larger \(F\).

The Crank-Nicolson method produces smooth solutions for small \(F\), \(F\leq\frac{1}{2}\), but small noise gets more and more evident as \(F\) increases. Figures Crank-Nicolson scheme for and Crank-Nicolson scheme for demonstrate the effect for \(F=3\) and \(F=10\), respectively. The section Analysis of schemes for the diffusion equation explains why such noise occur.

Crank-Nicolson scheme for \(F=3\)

Crank-Nicolson scheme for \(F=10\)

The Laplace and Poisson equation¶

The Laplace equation, \(\nabla^2 u = 0\), and the Poisson equation, \(-\nabla^2 u = f\), occur in numerous applications throughout science and engineering. In 1D these equations read \(u''(x)=0\) and \(-u''(x)=f(x)\), respectively. We can solve 1D variants of the Laplace equations with the listed software, because we can interpret \(u_{xx}=0\) as the limiting solution of \(u_t = {\alpha} u_{xx}\) when \(u\) reaches a steady state limit where \(u_t\rightarrow 0\). Similarly, Poisson's equation \(-u_{xx}=f\) arises from solving \(u_t = u_{xx} + f\) and letting \(t\rightarrow\infty\) so \(u_t\rightarrow 0\).

Technically in a program, we can simulate \(t\rightarrow\infty\) by just taking one large time step: \(\Delta t\rightarrow\infty\). In the limit, the Backward Euler scheme gives

\[-\frac{u^{n+1}_{i+1} - 2u^{n+1}_i + u^{n+1}_{i-1}}{\Delta x^2} = f^{n+1}_i,\]

which is nothing but the discretization \([-D_xD_x u = f]^{n+1}_i=0\) of \(-u_{xx}=f\).

The result above means that the Backward Euler scheme can solve the limit equation directly and hence produce a solution of the 1D Laplace equation. With the Forward Euler scheme we must do the time stepping since \(\Delta t > \Delta x^2/{\alpha}\) is illegal and leads to instability. We may interpret this time stepping as solving the equation system from \(-u_{xx}=f\) by iterating on a pseudo time variable.

Analysis of schemes for the diffusion equation¶

The numerical experiments in the sections Numerical experiments and Experiments reveal that there are some numerical problems with the Forward Euler and Crank-Nicolson schemes: sawtooth-like noise is sometimes present in solutions that are, from a mathematical point of view, expected to be smooth. This section presents a mathematical analysis that explains the observed behavior and arrives at criteria for obtaining numerical solutions that reproduce the qualitative properties of the exact solutions. In short, we shall explain what is observed in Figures Forward Euler scheme for -Crank-Nicolson scheme for .

Properties of the solution¶

A particular characteristic of diffusive processes, governed by an equation like

\[\tag{390} u_t = {\alpha} u_{xx},\]

is that the initial shape \(u(x,0)=I(x)\) spreads out in space with time, along with a decaying amplitude. Three different examples will illustrate the spreading of \(u\) in space and the decay in time.

Similarity solution¶

The diffusion equation (390) admits solutions that depend on \(\eta = (x-c)/\sqrt{4{\alpha} t}\) for a given value of \(c\). One particular solution is

\[\tag{391} u(x,t) = a\,\mbox{erf}(\eta) + b,\]

where

\[\tag{392} \mbox{erf}(\eta) = \frac{2}{\sqrt{\pi}}\int_0^\eta e^{-\zeta^2}d\zeta,\]

is the error function, and \(a\) and \(b\) are arbitrary constants. The error function lies in \((-1,1)\), is odd around \(\eta =0\), and goes relatively quickly to \(\pm 1\):

\[\begin{split}\begin{align*} \lim_{\eta\rightarrow -\infty}\mbox{erf}(\eta) &=-1,\\ \lim_{\eta\rightarrow \infty}\mbox{erf}(\eta) &=1,\\ \mbox{erf}(\eta) &= -\mbox{erf}(-\eta),\\ \mbox{erf}(0) &=0,\\ \mbox{erf}(2) &=0.99532227,\\ \mbox{erf}(3) &=0.99997791 {\thinspace .} \end{align*}\end{split}\]

As \(t\rightarrow 0\), the error function approaches a step function centered at \(x=c\). For a diffusion problem posed on the unit interval \([0,1]\), we may choose the step at \(x=1/2\) (meaning \(c=1/2\)), \(a=-1/2\), \(b=1/2\). Then

\[\tag{393} u(x,t) = \frac{1}{2}\left(1 - \mbox{erf}\left(\frac{x-\frac{1}{2}}{\sqrt{4{\alpha} t}}\right)\right) = \frac{1}{2}\mbox{erfc}\left(\frac{x-\frac{1}{2}}{\sqrt{4{\alpha} t}}\right),\]

where we have introduced the complementary error function \(\mbox{erfc}(\eta) = 1-\mbox{erf}(\eta)\). The solution (393) implies the boundary conditions

\[\tag{394} u(0,t) = \frac{1}{2}\left(1 - \mbox{erf}\left(\frac{-1/2}{\sqrt{4{\alpha} t}}\right)\right),\]

\[ \begin{align}\begin{aligned}\tag{395} u(1,t) = \frac{1}{2}\left(1 - \mbox{erf}\left(\frac{1/2}{\sqrt{4{\alpha} t}}\right)\right)\\ {\thinspace .}\end{aligned}\end{align} \]

For small enough \(t\), \(u(0,t)\approx 1\) and \(u(1,t)\approx 1\), but as \(t\rightarrow\infty\), \(u(x,t)\rightarrow 1/2\) on \([0,1]\).

Solution for a Gaussian pulse¶

The standard diffusion equation \(u_t = {\alpha} u_{xx}\) admits a Gaussian function as solution:

\[ \begin{align}\begin{aligned}\tag{396} u(x,t) = \frac{1}{\sqrt{4\pi{\alpha} t}} \exp{\left({-\frac{(x-c)^2}{4{\alpha} t}}\right)}\\ {\thinspace .}\end{aligned}\end{align} \]

At \(t=0\) this is a Dirac delta function, so for computational purposes one must start to view the solution at some time \(t=t_\epsilon>0\). Replacing \(t\) by \(t_\epsilon +t\) in (396) makes it easy to operate with a (new) \(t\) that starts at \(t=0\) with an initial condition with a finite width. The important feature of (396) is that the standard deviation \(\sigma\) of a sharp initial Gaussian pulse increases in time according to \(\sigma = \sqrt{2{\alpha} t}\), making the pulse diffuse and flatten out.

Solution for a sine component¶

Also, (390) admits a solution of the form

\[ \begin{align}\begin{aligned}\tag{397} u(x,t) = Qe^{-at}\sin\left( kx\right)\\ {\thinspace .}\end{aligned}\end{align} \]

The parameters \(Q\) and \(k\) can be freely chosen, while inserting (397) in (390) gives the constraint

\[a = -{\alpha} k^2 {\thinspace .}\]

A very important feature is that the initial shape \(I(x)=Q\sin kx\) undergoes a damping \(\exp{(-{\alpha} k^2t)}\), meaning that rapid oscillations in space, corresponding to large \(k\), are very much faster dampened than slow oscillations in space, corresponding to small \(k\). This feature leads to a smoothing of the initial condition with time. (In fact, one can use a few steps of the diffusion equation as a method for removing noise in signal processing.) To judge how good a numerical method is, we may look at its ability to smoothen or dampen the solution in the same way as the PDE does.

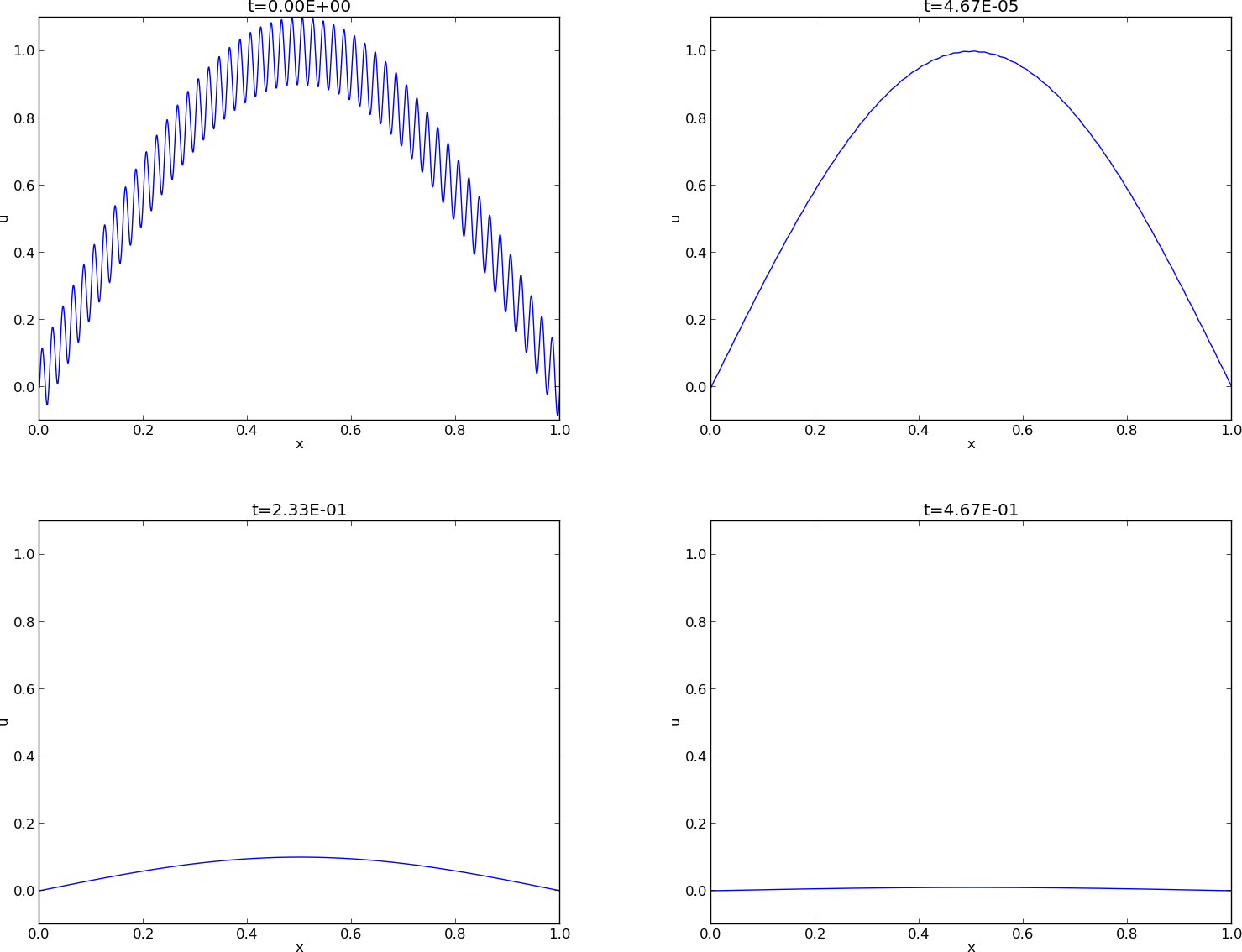

The following example illustrates the damping properties of (397). We consider the specific problem

\[\begin{split}\begin{align*} u_t &= u_{xx},\quad x\in (0,1),\ t\in (0,T],\\ u(0,t) &= u(1,t) = 0,\quad t\in (0,T],\\ u(x,0) & = \sin (\pi x) + 0.1\sin(100\pi x) {\thinspace .} \end{align*}\end{split}\]

The initial condition has been chosen such that adding two solutions like (397) constructs an analytical solution to the problem:

\[ \begin{align}\begin{aligned}\tag{398} u(x,t) = e^{-\pi^2 t}\sin (\pi x) + 0.1e^{-\pi^2 10^4 t}\sin (100\pi x)\\ {\thinspace .}\end{aligned}\end{align} \]

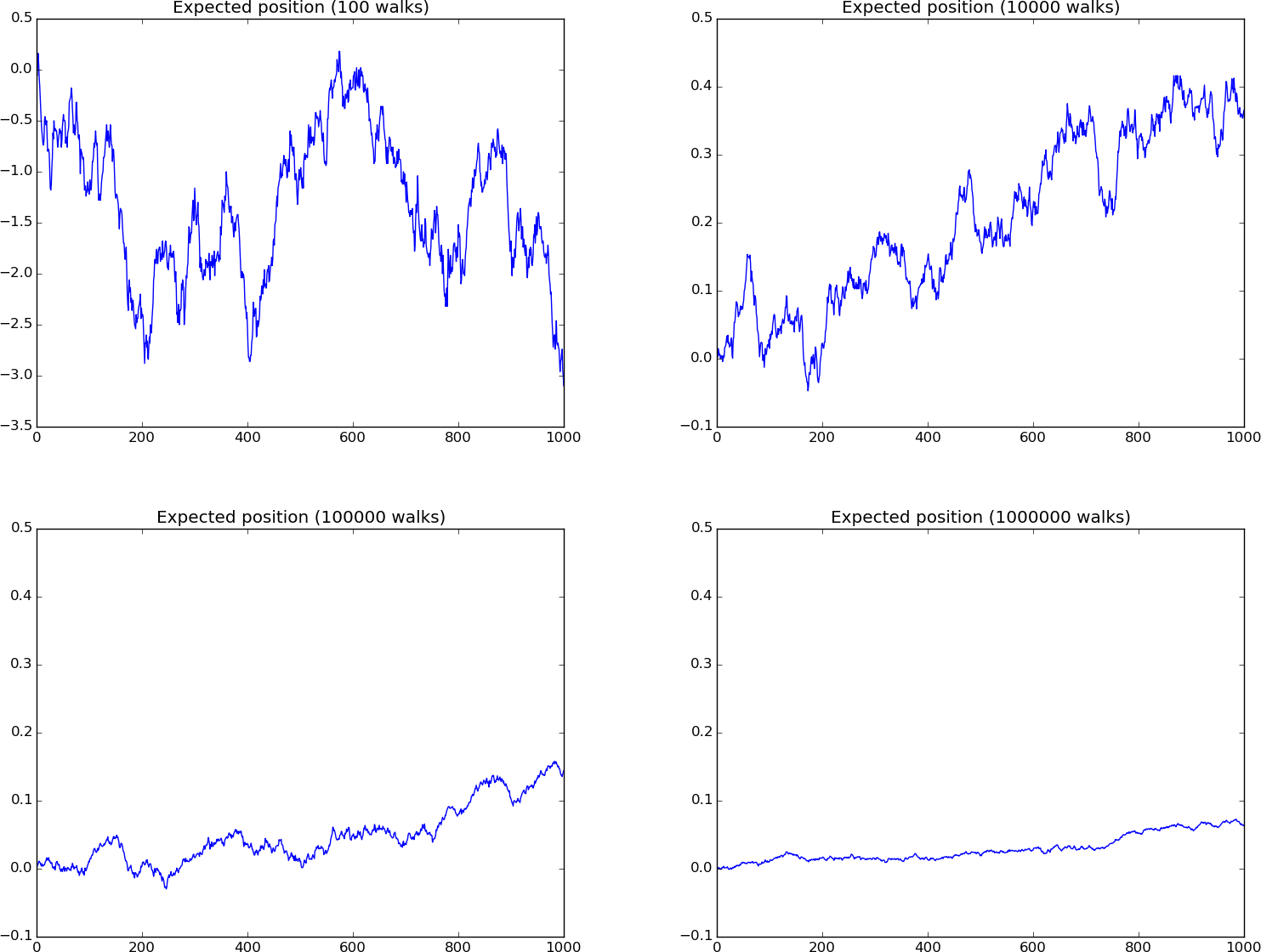

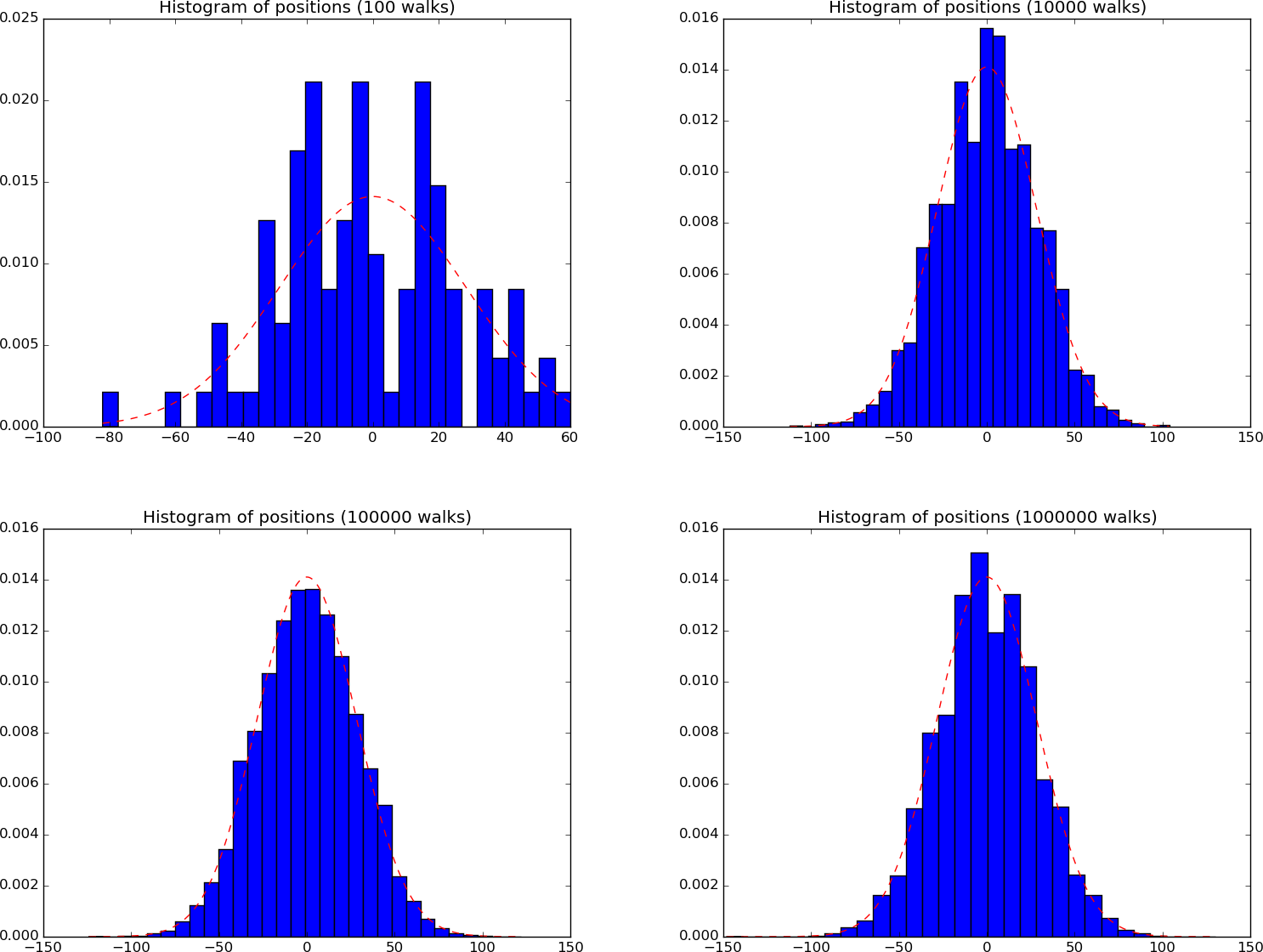

Figure Evolution of the solution of a diffusion problem: initial condition (upper left), 1/100 reduction of the small waves (upper right), 1/10 reduction of the long wave (lower left), and 1/100 reduction of the long wave (lower right) illustrates the rapid damping of rapid oscillations \(\sin (100\pi x)\) and the very much slower damping of the slowly varying \(\sin (\pi x)\) term. After about \(t=0.5\cdot10^{-4}\) the rapid oscillations do not have a visible amplitude, while we have to wait until \(t\sim 0.5\) before the amplitude of the long wave \(\sin (\pi x)\) becomes very small.

Evolution of the solution of a diffusion problem: initial condition (upper left), 1/100 reduction of the small waves (upper right), 1/10 reduction of the long wave (lower left), and 1/100 reduction of the long wave (lower right)

Analysis of discrete equations¶

A counterpart to (397) is the complex representation of the same function:

\[u(x,t) = Qe^{-at}e^{ikx},\]

where \(i=\sqrt{-1}\) is the imaginary unit. We can add such functions, often referred to as wave components, to make a Fourier representation of a general solution of the diffusion equation:

\[\tag{399} u(x,t) \approx \sum_{k\in K} b_k e^{-{\alpha} k^2t}e^{ikx},\]

where \(K\) is a set of an infinite number of \(k\) values needed to construct the solution. In practice, however, the series is truncated and \(K\) is a finite set of \(k\) values needed to build a good approximate solution. Note that (398) is a special case of (399) where \(K=\{\pi, 100\pi\}\), \(b_{\pi}=1\), and \(b_{100\pi}=0.1\).

The amplitudes \(b_k\) of the individual Fourier waves must be determined from the initial condition. At \(t=0\) we have \(u\approx\sum_kb_k\exp{(ikx)}\) and find \(K\) and \(b_k\) such that

\[\tag{400} I(x) \approx \sum_{k\in K} b_k e^{ikx}{\thinspace .}\]

(The relevant formulas for \(b_k\) come from Fourier analysis, or equivalently, a least-squares method for approximating \(I(x)\) in a function space with basis \(\exp{(ikx)}\).)

Much insight about the behavior of numerical methods can be obtained by investigating how a wave component \(\exp{(-{\alpha} k^2 t)}\exp{(ikx)}\) is treated by the numerical scheme. It appears that such wave components are also solutions of the schemes, but the damping factor \(\exp{(-{\alpha} k^2 t)}\) varies among the schemes. To ease the forthcoming algebra, we write the damping factor as \(A^n\). The exact amplification factor corresponding to \(A\) is \({A_{\small\mbox{e}}} = \exp{(-{\alpha} k^2\Delta t)}\).

Analysis of the finite difference schemes¶

We have seen that a general solution of the diffusion equation can be built as a linear combination of basic components

\[e^{-{\alpha} k^2t}e^{ikx} {\thinspace .}\]

A fundamental question is whether such components are also solutions of the finite difference schemes. This is indeed the case, but the amplitude \(\exp{(-{\alpha} k^2t)}\) might be modified (which also happens when solving the ODE counterpart \(u'=-{\alpha} u\)). We therefore look for numerical solutions of the form

\[\tag{401} u^n_q = A^n e^{ikq\Delta x} = A^ne^{ikx},\]

where the amplification factor \(A\) must be determined by inserting the component into an actual scheme. Note that \(A^n\) means \(A\) raised to the power of \(n\), \(n\) being the index in the time mesh, while the superscript \(n\) in \(u^n_q\) just denotes \(u\) at time \(t_n\).

Stability¶

The exact amplification factor is \({A_{\small\mbox{e}}}=\exp{(-{\alpha}^2 k^2\Delta t)}\). We should therefore require \(|A| < 1\) to have a decaying numerical solution as well. If \(-1\leq A<0\), \(A^n\) will change sign from time level to time level, and we get stable, non-physical oscillations in the numerical solutions that are not present in the exact solution.

Accuracy¶

To determine how accurately a finite difference scheme treats one wave component (401), we see that the basic deviation from the exact solution is reflected in how well \(A^n\) approximates \({A_{\small\mbox{e}}}^n\), or how well \(A\) approximates \({A_{\small\mbox{e}}}\). We can plot \({A_{\small\mbox{e}}}\) and the various expressions for \(A\), and we can make Taylor expansions of \(A/{A_{\small\mbox{e}}}\) to see the error more analytically.

Truncation error¶

As an alternative to examining the accuracy of the damping of a wave component, we can perform a general truncation error analysis as explained in Appendix: Truncation error analysis. Such results are more general, but less detailed than what we get from the wave component analysis. The truncation error can almost always be computed and represents the error in the numerical model when the exact solution is substituted into the equations. In particular, the truncation error analysis tells the order of the scheme, which is of fundamental importance when verifying codes based on empirical estimation of convergence rates.

Analysis of the Forward Euler scheme¶

The Forward Euler finite difference scheme for \(u_t = {\alpha} u_{xx}\) can be written as

\[[D_t^+ u = {\alpha} D_xD_x u]^n_q{\thinspace .}\]

Inserting a wave component (401) in the scheme demands calculating the terms

\[e^{ikq\Delta x}[D_t^+ A]^n = e^{ikq\Delta x}A^n\frac{A-1}{\Delta t},\]

and

\[A^nD_xD_x [e^{ikx}]_q = A^n\left( - e^{ikq\Delta x}\frac{4}{\Delta x^2} \sin^2\left(\frac{k\Delta x}{2}\right)\right) {\thinspace .}\]

Inserting these terms in the discrete equation and dividing by \(A^n e^{ikq\Delta x}\) leads to

\[\frac{A-1}{\Delta t} = -{\alpha} \frac{4}{\Delta x^2}\sin^2\left( \frac{k\Delta x}{2}\right),\]

and consequently

\[\tag{402} A = 1 -4F\sin^2 p\]

where

\[\tag{403} F = \frac{{\alpha}\Delta t}{\Delta x^2}\]

is the numerical Fourier number, and \(p=k\Delta x/2\). The complete numerical solution is then

\[\tag{404} u^n_q = \left(1 -4F\sin^2 p\right)^ne^{ikq\Delta x} {\thinspace .}\]

Stability¶

We easily see that \(A\leq 1\). However, the \(A\) can be less than \(-1\), which will lead to growth of a numerical wave component. The criterion \(A\geq -1\) implies

\[4F\sin^2 (p/2)\leq 2 {\thinspace .}\]

The worst case is when \(\sin^2 (p/2)=1\), so a sufficient criterion for stability is

\[\tag{405} F\leq {\frac{1}{2}},\]

or expressed as a condition on \(\Delta t\):

\[\tag{406} \Delta t\leq \frac{\Delta x^2}{2{\alpha}}{\thinspace .}\]

Note that halving the spatial mesh size, \(\Delta x \rightarrow {\frac{1}{2}} \Delta x\), requires \(\Delta t\) to be reduced by a factor of \(1/4\). The method hence becomes very expensive for fine spatial meshes.

Accuracy¶

Since \(A\) is expressed in terms of \(F\) and the parameter we now call \(p=k\Delta x/2\), we should also express \({A_{\small\mbox{e}}}\) by \(F\) and \(p\). The exponent in \({A_{\small\mbox{e}}}\) is \(-{\alpha} k^2\Delta t\), which equals \(-F k^2\Delta x^2=-F4p^2\). Consequently,

\[{A_{\small\mbox{e}}} = \exp{(-{\alpha} k^2\Delta t)} = \exp{(-4Fp^2)} {\thinspace .}\]

All our \(A\) expressions as well as \({A_{\small\mbox{e}}}\) are now functions of the two dimensionless parameters \(F\) and \(p\).

Computing the Taylor series expansion of \(A/{A_{\small\mbox{e}}}\) in terms of \(F\) can easily be done with aid of sympy :

def A_exact ( F , p ): return exp ( - 4 * F * p ** 2 ) def A_FE ( F , p ): return 1 - 4 * F * sin ( p ) ** 2 from sympy import * F , p = symbols ( 'F p' ) A_err_FE = A_FE ( F , p ) / A_exact ( F , p ) print A_err_FE . series ( F , 0 , 6 )

The result is

\[\frac{A}{{A_{\small\mbox{e}}}} = 1 - 4 F \sin^{2}p + 2F p^{2} - 16F^{2} p^{2} \sin^{2}p + 8 F^{2} p^{4} + \cdots\]

Recalling that \(F={\alpha}\Delta t/\Delta x^2\), \(p=k\Delta x/2\), and that \(\sin^2p\leq 1\), we realize that the dominating terms in \(A/{A_{\small\mbox{e}}}\) are at most

\[1 - 4{\alpha} \frac{\Delta t}{\Delta x^2} + {\alpha}\Delta t - 4{\alpha}^2\Delta t^2 + {\alpha}^2 \Delta t^2\Delta x^2 + \cdots {\thinspace .}\]

Analysis of the Backward Euler scheme¶

Discretizing \(u_t = {\alpha} u_{xx}\) by a Backward Euler scheme,

\[[D_t^- u = {\alpha} D_xD_x u]^n_q,\]

and inserting a wave component (401), leads to calculations similar to those arising from the Forward Euler scheme, but since

\[e^{ikq\Delta x}[D_t^- A]^n = A^ne^{ikq\Delta x}\frac{1 - A^{-1}}{\Delta t},\]

we get

\[\frac{1-A^{-1}}{\Delta t} = -{\alpha} \frac{4}{\Delta x^2}\sin^2\left( \frac{k\Delta x}{2}\right),\]

and then

\[ \begin{align}\begin{aligned}\tag{407} A = \left(1 + 4F\sin^2p\right)^{-1}\\ {\thinspace .}\end{aligned}\end{align} \]

The complete numerical solution can be written

\[\tag{408} u^n_q = \left(1 + 4F\sin^2 p\right)^{-n} e^{ikq\Delta x} {\thinspace .}\]

Stability¶

We see from (407) that \(0<A<1\), which means that all numerical wave components are stable and non-oscillatory for any \(\Delta t >0\).

Truncation error¶

The derivation of the truncation error for the Backward Euler scheme is almost identical to that for the Forward Euler scheme. We end up with

\[R^n_i = {\mathcal{O}(\Delta t)} + {\mathcal{O}(\Delta x^2)}{\thinspace .}\]

Analysis of the Crank-Nicolson scheme¶

The Crank-Nicolson scheme can be written as

\[[D_t u = {\alpha} D_xD_x \overline{u}^x]^{n+\frac{1}{2}}_q,\]

or

\[[D_t u]^{n+\frac{1}{2}}_q = \frac{1}{2}{\alpha}\left( [D_xD_x u]^{n}_q + [D_xD_x u]^{n+1}_q\right) {\thinspace .}\]

Inserting (401) in the time derivative approximation leads to

\[[D_t A^n e^{ikq\Delta x}]^{n+\frac{1}{2}} = A^{n+\frac{1}{2}} e^{ikq\Delta x}\frac{A^{\frac{1}{2}}-A^{-\frac{1}{2}}}{\Delta t} = A^ne^{ikq\Delta x}\frac{A-1}{\Delta t} {\thinspace .}\]

Inserting (401) in the other terms and dividing by \(A^ne^{ikq\Delta x}\) gives the relation

\[\frac{A-1}{\Delta t} = -\frac{1}{2}{\alpha}\frac{4}{\Delta x^2} \sin^2\left(\frac{k\Delta x}{2}\right) (1 + A),\]

and after some more algebra,

\[\tag{409} A = \frac{ 1 - 2F\sin^2p}{1 + 2F\sin^2p} {\thinspace .}\]

The exact numerical solution is hence

\[\tag{410} u^n_q = \left(\frac{ 1 - 2F\sin^2p}{1 + 2F\sin^2p}\right)^ne^{ikp\Delta x} {\thinspace .}\]

Stability¶

The criteria \(A>-1\) and \(A<1\) are fulfilled for any \(\Delta t >0\). Therefore, the solution cannot grow, but it will oscillate if \(1-2F\sin^p < 0\). To avoid such non-physical oscillations, we must demand \(F\leq\frac{1}{2}\).

Truncation error¶

- The truncation error is derived in

- Linear diffusion equation in 1D:

\[R^{n+\frac{1}{2}}_i = {\mathcal{O}(\Delta x^2)} + {\mathcal{O}(\Delta t^2)}{\thinspace .}\]

Analysis of the Leapfrog scheme¶

An attractive feature of the Forward Euler scheme is the explicit time stepping and no need for solving linear systems. However, the accuracy in time is only \({\mathcal{O}(\Delta t)}\). We can get an explicit second-order scheme in time by using the Leapfrog method:

\[[D_{2t} u = {\alpha} D_xDx u + f]^n_i{\thinspace .}\]

Written out,

\[u^{n+1} = u^{n-1} + \frac{2{\alpha}\Delta t}{\Delta x^2} (u^{n}_{i+1} - 2u^n_i + u^n_{i-1}) + f(x_i,t_n){\thinspace .}\]

We need some formula for the first step, \(u^1_i\), but for that we can use a Forward Euler step.

Unfortunately, the Leapfrog scheme is always unstable for the diffusion equation. To see this, we insert a wave component \(A^ne^{ikx}\) and get

\[\frac{A - A^{-1}}{\Delta t} = -{\alpha} \frac{4}{\Delta x^2}\sin^2 p,\]

or

\[A^2 + 4F \sin^2 p\, A - 1 = 0,\]

which has roots

\[A = -2F\sin^2 p \pm \sqrt{4F^2\sin^4 p + 1}{\thinspace .}\]

Both roots have \(|A|>1\) so the always amplitude grows, which is not in accordance with physics of the problem. However, for a PDE with a first-order derivative in space, instead of a second-order one, the Leapfrog scheme performs very well. Details are provided in the section Leapfrog in time, centered differences in space.

Summary of accuracy of amplification factors¶

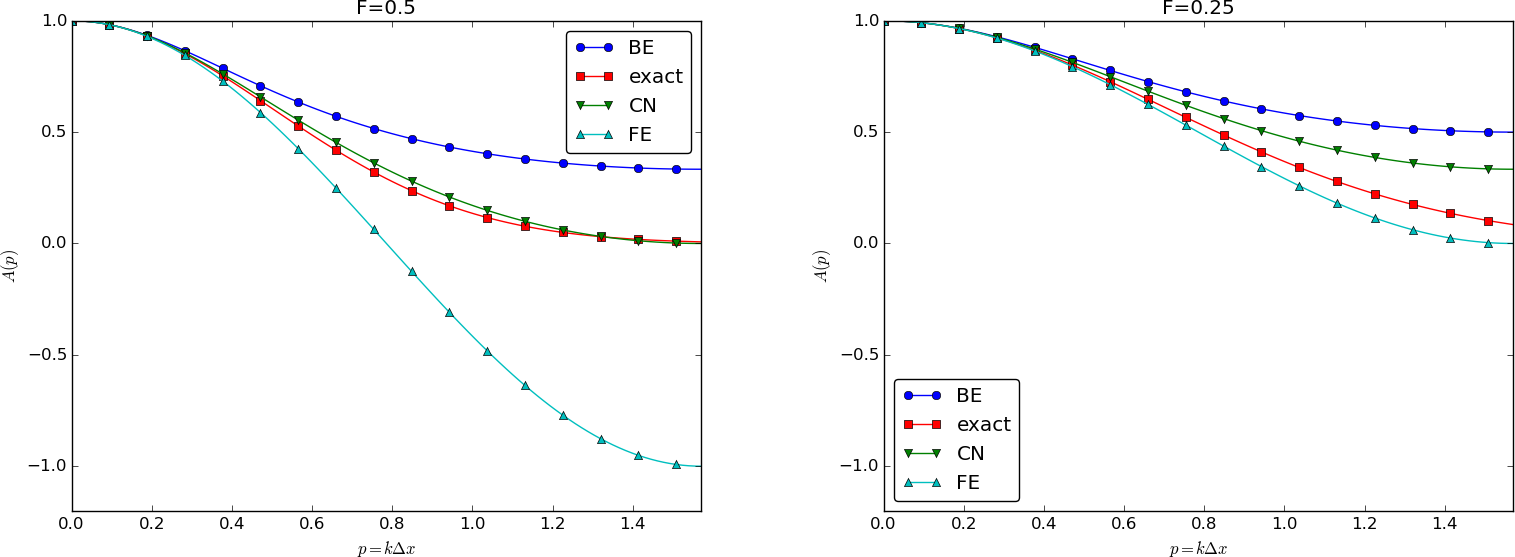

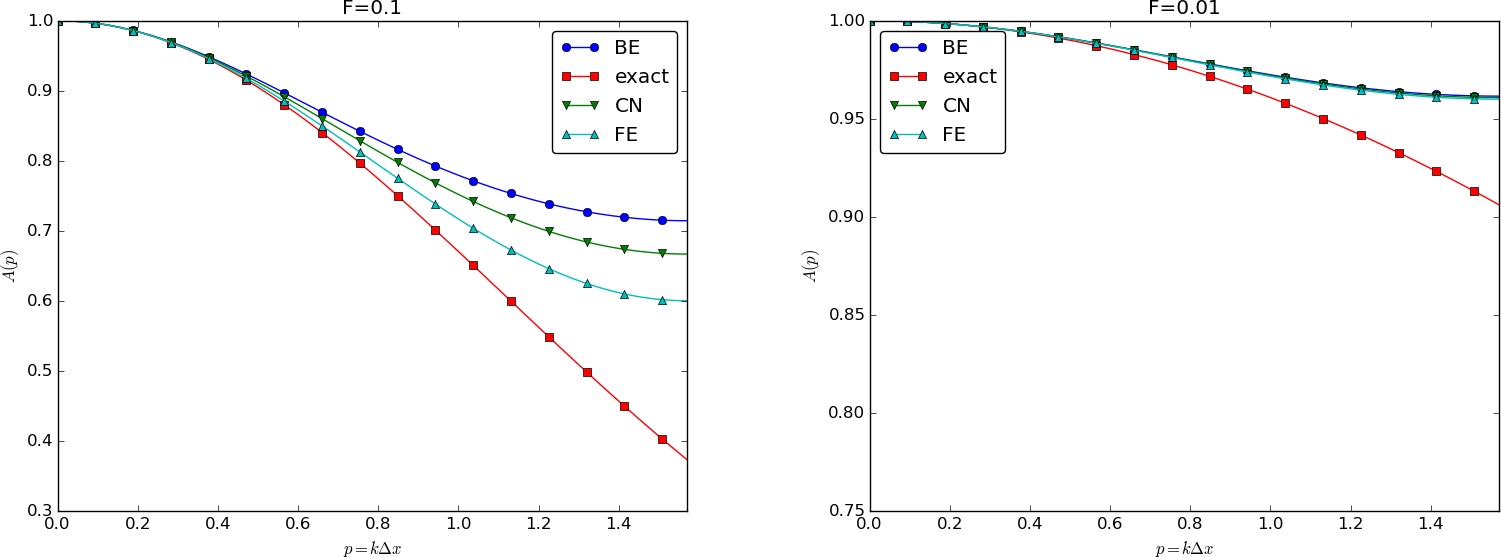

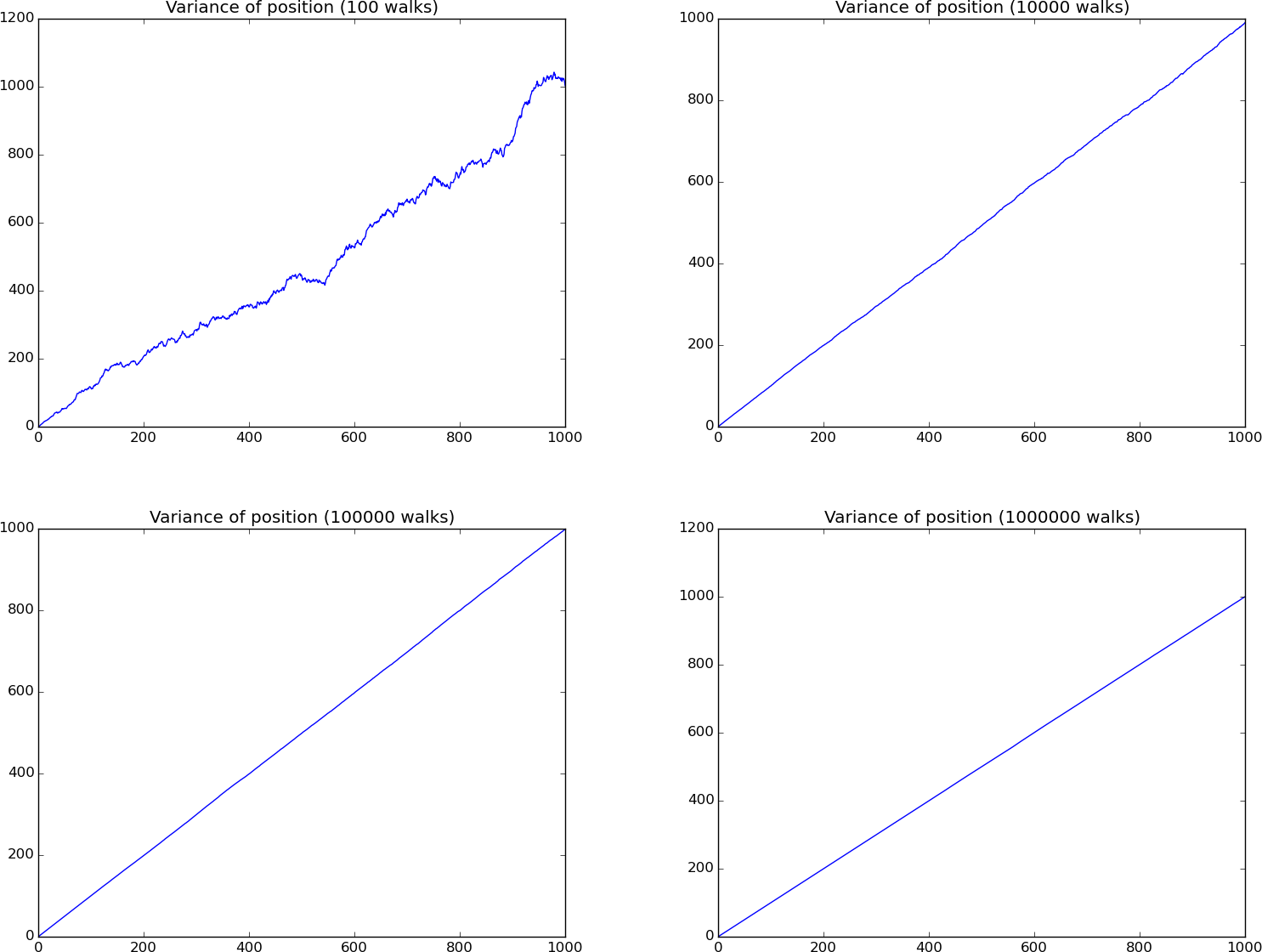

We can plot the various amplification factors against \(p=k\Delta x/2\) for different choices of the \(F\) parameter. Figures Amplification factors for large time steps, Amplification factors for time steps around the Forward Euler stability limit, and Amplification factors for small time steps show how long and small waves are damped by the various schemes compared to the exact damping. As long as all schemes are stable, the amplification factor is positive, except for Crank-Nicolson when \(F>0.5\).

Amplification factors for large time steps

Amplification factors for time steps around the Forward Euler stability limit

Amplification factors for small time steps

The effect of negative amplification factors is that \(A^n\) changes sign from one time level to the next, thereby giving rise to oscillations in time in an animation of the solution. We see from Figure Amplification factors for large time steps that for \(F=20\), waves with \(p\geq \pi/4\) undergo a damping close to \(-1\), which means that the amplitude does not decay and that the wave component jumps up and down (flips amplitude) in time. For \(F=2\) we have a damping of a factor of 0.5 from one time level to the next, which is very much smaller than the exact damping. Short waves will therefore fail to be effectively dampened. These waves will manifest themselves as high frequency oscillatory noise in the solution.

A value \(p=\pi/4\) corresponds to four mesh points per wave length of \(e^{ikx}\), while \(p=\pi/2\) implies only two points per wave length, which is the smallest number of points we can have to represent the wave on the mesh.

To demonstrate the oscillatory behavior of the Crank-Nicolson scheme, we choose an initial condition that leads to short waves with significant amplitude. A discontinuous \(I(x)\) will in particular serve this purpose: Figures Crank-Nicolson scheme for and Crank-Nicolson scheme for correspond to \(F=3\) and \(F=10\), respectively, and we see how short waves pollute the overall solution.

Analysis of the 2D diffusion equation¶

We first consider the 2D diffusion equation

\[u_{t} = {\alpha}(u_{xx} + u_{yy}),\]

which has Fourier component solutions of the form

\[u(x,y,t) = Ae^{-{\alpha} k^2t}e^{i(k_x x + k_yy)},\]

and the schemes have discrete versions of this Fourier component:

\[u^{n}_{q,r} = A\xi^{n}e^{i(k_x q\Delta x + k_y r\Delta y)}{\thinspace .}\]

The Forward Euler scheme¶

For the Forward Euler discretization,

\[[D_t^+u = {\alpha}(D_xD_x u + D_yD_y u)]_{i,j}^n,\]

we get

\[\frac{\xi - 1}{\Delta t} = -{\alpha}\frac{4}{\Delta x^2}\sin^2\left(\frac{k_x\Delta x}{2}\right) - {\alpha}\frac{4}{\Delta y^2}\sin^2\left(\frac{k_y\Delta y}{2}\right){\thinspace .}\]

Introducing

\[p_x = \frac{k_x\Delta x}{2},\quad p_y = \frac{k_y\Delta y}{2},\]

we can write the equation for \(\xi\) more compactly as

\[\frac{\xi - 1}{\Delta t} = -{\alpha}\frac{4}{\Delta x^2}\sin^2 p_x - {\alpha}\frac{4}{\Delta y^2}\sin^2 p_y,\]

and solve for \(\xi\):

\[\tag{411} \xi = 1 - 4F_x\sin^2 p_x - 4F_y\sin^2 p_y{\thinspace .}\]

The complete numerical solution for a wave component is

\[\tag{412} u^{n}_{q,r} = A(1 - 4F_x\sin^2 p_x - 4F_y\sin^2 p_y)^n e^{i(k_xp\Delta x + k_yq\Delta y)}{\thinspace .}\]

For stability we demand \(-1\leq\xi\leq 1\), and \(-1\leq\xi\) is the critical limit, since clearly \(\xi \leq 1\), and the worst case happens when the sines are at their maximum. The stability criterion becomes

\[\tag{413} F_x + F_y \leq \frac{1}{2}{\thinspace .}\]

For the special, yet common, case \(\Delta x=\Delta y=h\), the stability criterion can be written as

\[\Delta t \leq \frac{h^2}{2d{\alpha}},\]

where \(d\) is the number of space dimensions: \(d=1,2,3\).

The Backward Euler scheme¶

The Backward Euler method,

\[[D_t^-u = {\alpha}(D_xD_x u + D_yD_y u)]_{i,j}^n,\]

results in

\[1 - \xi^{-1} = - 4F_x \sin^2 p_x - 4F_y \sin^2 p_y,\]

and

\[\xi = (1 + 4F_x \sin^2 p_x + 4F_y \sin^2 p_y)^{-1},\]

which is always in \((0,1]\). The solution for a wave component becomes

\[\tag{414} u^{n}_{q,r} = A(1 + 4F_x\sin^2 p_x + 4F_y\sin^2 p_y)^{-n} e^{i(k_xq\Delta x + k_yr\Delta y)}{\thinspace .}\]

The Crank-Nicolson scheme¶

With a Crank-Nicolson discretization,

\[[D_tu]^{n+\frac{1}{2}}_{i,j} = \frac{1}{2} [{\alpha}(D_xD_x u + D_yD_y u)]_{i,j}^{n+1} + \frac{1}{2} [{\alpha}(D_xD_x u + D_yD_y u)]_{i,j}^n,\]

we have, after some algebra,

\[\xi = \frac{1 - 2(F_x\sin^2 p_x + F_x\sin^2p_y)}{1 + 2(F_x\sin^2 p_x + F_x\sin^2p_y)}{\thinspace .}\]

The fraction on the right-hand side is always less than 1, so stability in the sense of non-growing wave components is guaranteed for all physical and numerical parameters. However, the fraction can become negative and result in non-physical oscillations. This phenomenon happens when

\[F_x\sin^2 p_x + F_x\sin^2p_y > \frac{1}{2}{\thinspace .}\]

A criterion against non-physical oscillations is therefore

\[F_x + F_y \leq \frac{1}{2},\]

which is the same limit as the stability criterion for the Forward Euler scheme.

The exact discrete solution is

\[\tag{415} u^{n}_{q,r} = A \left( \frac{1 - 2(F_x\sin^2 p_x + F_x\sin^2p_y)}{1 + 2(F_x\sin^2 p_x + F_x\sin^2p_y)} \right)^n e^{i(k_xq\Delta x + k_yr\Delta y)}{\thinspace .}\]

Explanation of numerical artifacts¶

The behavior of the solution generated by Forward Euler discretization in time (and centered differences in space) is summarized at the end of the section Numerical experiments. Can we from the analysis above explain the behavior?

We may start by looking at Figure Forward Euler scheme for where \(F=0.51\). The figure shows that the solution is unstable and grows in time. The stability limit for such growth is \(F=0.5\) and since the \(F\) in this simulation is slightly larger, growth is unavoidable.

Figure Forward Euler scheme for has unexpected features: we would expect the solution of the diffusion equation to be smooth, but the graphs in Figure Forward Euler scheme for contain non-smooth noise. Turning to Figure Forward Euler scheme for , which has a quite similar initial condition, we see that the curves are indeed smooth. The problem with the results in Figure Forward Euler scheme for is that the initial condition is discontinuous. To represent it, we need a significant amplitude on the shortest waves in the mesh. However, for \(F=0.5\), the shortest wave (\(p=\pi/2\)) gives the amplitude in the numerical solution as \((1-4F)^n\), which oscillates between negative and positive values at subsequent time levels for \(F>\frac{1}{4}\). Since the shortest waves have visible amplitudes in the solution profile, the oscillations becomes visible. The smooth initial condition in Figure Forward Euler scheme for , on the other hand, leads to very small amplitudes of the shortest waves. That these waves then oscillate in a non-physical way for \(F=0.5\) is not a visible effect. The oscillations in time in the amplitude \((1-4F)^n\) disappear for \(F\leq\frac{1}{4}\), and that is why also the discontinuous initial condition always leads to smooth solutions in Figure Forward Euler scheme for , where \(F=\frac{1}{4}\).

Turning the attention to the Backward Euler scheme and the experiments in Figure Backward Euler scheme for , we see that even the discontinuous initial condition gives smooth solutions for \(F=0.5\) (and in fact all other \(F\) values). From the exact expression of the numerical amplitude, \((1 + 4F\sin^2p)^{-1}\), we realize that this factor can never flip between positive and negative values, and no instabilities can occur. The conclusion is that the Backward Euler scheme always produces smooth solutions. Also, the Backward Euler scheme guarantees that the solution cannot grow in time (unless we add a source term to the PDE, but that is meant to represent a physically relevant growth).

Finally, we have some small, strange artifacts when simulating the development of the initial plug profile with the Crank-Nicolson scheme, see Figure Crank-Nicolson scheme for , where \(F=3\). The Crank-Nicolson scheme cannot give growing amplitudes, but it may give oscillating amplitudes in time. The critical factor is \(1 - 2F\sin^2p\), which for the shortest waves (\(p=\pi/2\)) indicates a stability limit \(F=0.5\). With the discontinuous initial condition, we have enough amplitude on the shortest waves so their wrong behavior is visible, and this is what we see as small instabilities in Figure Crank-Nicolson scheme for . The only remedy is to lower the \(F\) value.

Exercises¶

Exercise 3.1: Explore symmetry in a 1D problem¶

This exercise simulates the exact solution (396). Suppose for simplicity that \(c=0\).

a) Formulate an initial-boundary value problem that has (396) as solution in the domain \([-L,L]\). Use the exact solution (396) as Dirichlet condition at the boundaries. Simulate the diffusion of the Gaussian peak. Observe that the solution is symmetric around \(x=0\).

b) Show from (396) that \(u_x(c,t)=0\). Since the solution is symmetric around \(x=c=0\), we can solve the numerical problem in half of the domain, using a symmetry boundary condition \(u_x=0\) at \(x=0\). Set up the initial-boundary value problem in this case. Simulate the diffusion problem in \([0,L]\) and compare with the solution in a).

Filename: diffu_symmetric_gaussian .

Exercise 3.2: Investigate approximation errors from a \(u_x=0\) boundary condition¶

We consider the problem solved in Exercise 3.1: Explore symmetry in a 1D problem part b). The boundary condition \(u_x(0,t)=0\) can be implemented in two ways: 1) by a standard symmetric finite difference \([D_{2x}u]_i^n=0\), or 2) by a one-sided difference \([D^+u=0]^n_i=0\). Investigate the effect of these two conditions on the convergence rate in space.

Hint. If you use a Forward Euler scheme, choose a discretization parameter \(h=\Delta t = \Delta x^2\) and assume the error goes like \(E\sim h^r\). The error in the scheme is \({\mathcal{O}(\Delta t,\Delta x^2)}\) so one should expect that the estimated \(r\) approaches 1. The question is if a one-sided difference approximation to \(u_x(0,t)=0\) destroys this convergence rate.

Filename: diffu_onesided_fd .

Exercise 3.3: Experiment with open boundary conditions in 1D¶

We address diffusion of a Gaussian function as in Exercise 3.1: Explore symmetry in a 1D problem, in the domain \([0,L]\), but now we shall explore different types of boundary conditions on \(x=L\). In real-life problems we do not know the exact solution on \(x=L\) and must use something simpler.

a) Imagine that we want to solve the problem numerically on \([0,L]\), with a symmetry boundary condition \(u_x=0\) at \(x=0\), but we do not know the exact solution and cannot of that reason assign a correct Dirichlet condition at \(x=L\). One idea is to simply set \(u(L,t)=0\) since this will be an accurate approximation before the diffused pulse reaches \(x=L\) and even thereafter it might be a satisfactory condition if the exact \(u\) has a small value. Let \({u_{\small\mbox{e}}}\) be the exact solution and let \(u\) be the solution of \(u_t={\alpha} u_{xx}\) with an initial Gaussian pulse and the boundary conditions \(u_x(0,t)=u(L,t)=0\). Derive a diffusion problem for the error \(e={u_{\small\mbox{e}}} - u\). Solve this problem numerically using an exact Dirichlet condition at \(x=L\). Animate the evolution of the error and make a curve plot of the error measure

\[E(t)=\sqrt{\frac{\int_0^L e^2dx}{\int_0^L udx}}{\thinspace .}\]

Is this a suitable error measure for the present problem?

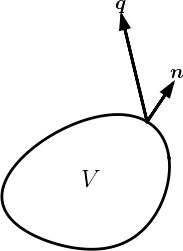

b) Instead of using \(u(L,t)=0\) as approximate boundary condition for letting the diffused Gaussian pulse move out of our finite domain, one may try \(u_x(L,t)=0\) since the solution for large \(t\) is quite flat. Argue that this condition gives a completely wrong asymptotic solution as \(t\rightarrow 0\). To do this, integrate the diffusion equation from \(0\) to \(L\), integrate \(u_{xx}\) by parts (or use Gauss' divergence theorem in 1D) to arrive at the important property

\[\frac{d}{dt}\int_{0}^L u(x,t)dx = 0,\]

implying that \(\int_0^Ludx\) must be constant in time, and therefore

\[\int_{0}^L u(x,t)dx = \int_{0}^LI(x)dx{\thinspace .}\]

The integral of the initial pulse is 1.

c) Another idea for an artificial boundary condition at \(x=L\) is to use a cooling law

\[\tag{416} -{\alpha} u_x = q(u - u_S),\]

where \(q\) is an unknown heat transfer coefficient and \(u_S\) is the surrounding temperature in the medium outside of \([0,L]\). (Note that arguing that \(u_S\) is approximately \(u(L,t)\) gives the \(u_x=0\) condition from the previous subexercise that is qualitatively wrong for large \(t\).) Develop a diffusion problem for the error in the solution using (416) as boundary condition. Assume one can take \(u_S=0\) "outside the domain" since \({u_{\small\mbox{e}}}\rightarrow 0\) as \(x\rightarrow\infty\). Find a function \(q=q(t)\) such that the exact solution obeys the condition (416). Test some constant values of \(q\) and animate how the corresponding error function behaves. Also compute \(E(t)\) curves as defined above.

Filename: diffu_open_BC .

Exercise 3.4: Simulate a diffused Gaussian peak in 2D/3D¶

a) Generalize (396) to multi dimensions by assuming that one-dimensional solutions can be multiplied to solve \(u_t = {\alpha}\nabla^2 u\). Set \(c=0\) such that the peak of the Gaussian is at the origin.

b) One can from the exact solution show that \(u_x=0\) on \(x=0\), \(u_y=0\) on \(y=0\), and \(u_z=0\) on \(z=0\). The approximately correct condition \(u=0\) can be set on the remaining boundaries (say \(x=L\), \(y=L\), \(z=L\)), cf. Exercise 3.3: Experiment with open boundary conditions in 1D. Simulate a 2D case and make an animation of the diffused Gaussian peak.

c) The formulation in b) makes use of symmetry of the solution such that we can solve the problem in the first quadrant (2D) or octant (3D) only. To check that the symmetry assumption is correct, formulate the problem without symmetry in a domain \([-L,L]\times [L,L]\) in 2D. Use \(u=0\) as approximately correct boundary condition. Simulate the same case as in b), but in a four times as large domain. Make an animation and compare it with the one in b).

Filename: diffu_symmetric_gaussian_2D .

Exercise 3.5: Examine stability of a diffusion model with a source term¶

Consider a diffusion equation with a linear \(u\) term:

\[u_t = {\alpha} u_{xx} + \beta u{\thinspace .}\]

a) Derive in detail the Forward Euler, Backward Euler, and Crank-Nicolson schemes for this type of diffusion model. Thereafter, formulate a \(\theta\)-rule to summarize the three schemes.

b) Assume a solution like (397) and find the relation between \(a\), \(k\), \({\alpha}\), and \(\beta\).

Hint. Insert (397) in the PDE problem.

c) Calculate the stability of the Forward Euler scheme. Design numerical experiments to confirm the results.

Hint. Insert the discrete counterpart to (397) in the numerical scheme. Run experiments at the stability limit and slightly above.

d) Repeat c) for the Backward Euler scheme.

e) Repeat c) for the Crank-Nicolson scheme.

f) How does the extra term \(bu\) impact the accuracy of the three schemes?

Hint. For analysis of the accuracy, compare the numerical and exact amplification factors, in graphs and/or by Taylor series expansion.

Filename: diffu_stability_uterm .

Diffusion in 2D¶

We now address a diffusion in two space dimensions:

\[\tag{436} \frac{\partial u}{\partial t} = {\alpha}\left( \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial x^2}\right) + f(x,y),\]

in a domain

\[(x,y)\in (0,L_x)\times (0,L_y),\ t\in (0,T],\]

with \(u=0\) on the boundary and \(u(x,y,0)=I(x,y)\) as initial condition.

Discretization¶

For generality, it is natural to use a \(\theta\)-rule for the time discretization. Standard, second-order accurate finite differences are used for the spatial derivatives. We sample the PDE at a space-time point \((i,j,n+\frac{1}{2})\) and apply the difference approximations:

\[\lbrack D_t u\rbrack^{n+\frac{1}{2}} = \theta \lbrack {\alpha} (D_xD_x u + D_yD_yu) + f\rbrack^{n+1} + \nonumber\]

\[\tag{437} \quad (1-\theta)\lbrack {\alpha} (D_xD_x u + D_yD_y u) + f\rbrack^{n}{\thinspace .}\]

Written out,

\[\frac{u^{n+1}_{i,j}-u^n_{i,j}}{\Delta t} =\nonumber\]

\[\qquad \theta ({\alpha} (\frac{u^{n+1}_{i-1,j} - 2^{n+1}_{i,j} + u^{n+1}_{i+1,j}}{\Delta x^2} + \frac{u^{n+1}_{i,j-1} - 2^{n+1}_{i,j} + u^{n+1}_{i,j+1}}{\Delta y^2}) + f^{n+1}_{i,j}) + \nonumber\]

\[\tag{438} \qquad (1-\theta)({\alpha} (\frac{u^{n}_{i-1,j} - 2^{n}_{i,j} + u^{n}_{i+1,j}}{\Delta x^2} + \frac{u^{n}_{i,j-1} - 2^{n}_{i,j} + u^{n}_{i,j+1}}{\Delta y^2}) + f^{n}_{i,j})\]

We collect the unknowns on the left-hand side

\[ u^{n+1}_{i,j} - \theta\left( F_x (u^{n+1}_{i-1,j} - 2^{n+1}_{i,j} + u^{n+1}_{i,j}) + F_y (u^{n+1}_{i,j-1} - 2^{n+1}_{i,j} + u^{n+1}_{i,j+1})\right) = \nonumber\]

\[\qquad (1-\theta)\left( F_x (u^{n}_{i-1,j} - 2^{n}_{i,j} + u^{n}_{i,j}) + F_y (u^{n}_{i,j-1} - 2^{n}_{i,j} + u^{n}_{i,j+1})\right) + \nonumber\]

\[\tag{439} \qquad \theta \Delta t f^{n+1}_{i,j} + (1-\theta) \Delta t f^{n}_{i,j} + u^n_{i,j},\]

where

\[F_x = \frac{{\alpha}\Delta t}{\Delta x^2},\quad F_y = \frac{{\alpha}\Delta t}{\Delta y^2},\]

are the Fourier numbers in \(x\) and \(y\) direction, respectively.

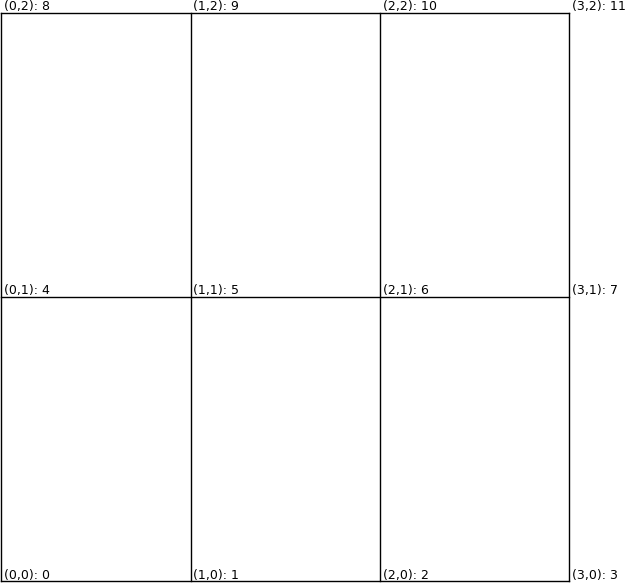

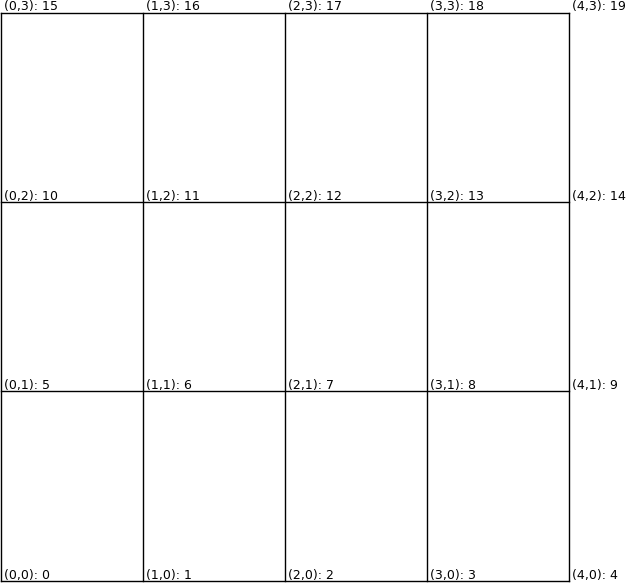

3x2 2D mesh

Numbering of mesh points versus equations and unknowns¶

The equations (439) are coupled at the new time level \(n+1\). That is, we must solve a system of (linear) algebraic equations, which we will write as \(Ac=b\), where \(A\) is the coefficient matrix, \(c\) is the vector of unknowns, and \(b\) is the right-hand side.

Let us examine the equations in \(Ac=b\) on a mesh with \(N_x=3\) and \(N_y=2\) cells in each direction. The spatial mesh is depicted in Figure 3x2 2D mesh. The equations at the boundary just implement the boundary condition \(u=0\):

\[\begin{split}\begin{align*} & u^{n+1}_{0,0}= u^{n+1}_{1,0}= u^{n+1}_{2,0}= u^{n+1}_{3,0}= u^{n+1}_{0,1}=\\ & u^{n+1}_{3,1}= u^{n+1}_{0,2}= u^{n+1}_{1,2}= u^{n+1}_{2,2}= u^{n+1}_{3,2}= 0{\thinspace .} \end{align*}\end{split}\]

We are left with two interior points, with \(i=1\), \(j=1\) and \(i=2\), \(j=1\). The corresponding equations are

\[\begin{split}\begin{align*} & u^{n+1}_{i,j} - \theta\left( F_x (u^{n+1}_{i-1,j} - 2^{n+1}_{i,j} + u^{n+1}_{i,j}) + F_y (u^{n+1}_{i,j-1} - 2^{n+1}_{i,j} + u^{n+1}_{i,j+1})\right) = \\ &\qquad (1-\theta)\left( F_x (u^{n}_{i-1,j} - 2^{n}_{i,j} + u^{n}_{i,j}) + F_y (u^{n}_{i,j-1} - 2^{n}_{i,j} + u^{n}_{i,j+1})\right) + \\ &\qquad \theta \Delta t f^{n+1}_{i,j} + (1-\theta) \Delta t f^{n}_{i,j} + u^n_{i,j}, \end{align*}\end{split}\]

There are in total 12 unknowns \(u^{n+1}_{i,j}\) for \(i=0,1,2,3\) and \(j=0,1,2\). To solve the equations, we need to form a matrix system \(Ac=b\). In that system, the solution vector \(c\) can only have one index. Thus, we need a numbering of the unknowns with one index, not two as used in the mesh. We introduce a mapping \(m(i,j)\) from a mesh point with indices \((i,j)\) to the corresponding unknown \(p\) in the equation system:

\[p = m(i,j) = j(N_x+1) + i{\thinspace .}\]

When \(i\) and \(j\) run through their values, we see the following mapping to \(p\):

\[\begin{split}\begin{align*} &(0,0)\rightarrow 0,\ (0,1)\rightarrow 1,\ (0,2)\rightarrow 2,\ (0,3)\rightarrow 3,\\ &(1,0)\rightarrow 4,\ (1,1)\rightarrow 5,\ (1,2)\rightarrow 6,\ (1,3)\rightarrow 7,\\ &(2,0)\rightarrow 8,\ (2,1)\rightarrow 9,\ (2,2)\rightarrow 10,\ (2,3)\rightarrow 11{\thinspace .} \end{align*}\end{split}\]

That is, we number the points along the \(x\) axis, starting with \(y=0\), and then progress one "horizontal" mesh line at a time. In Figure 3x2 2D mesh you can see that the \((i,j)\) and the corresponding single index (\(p\)) are listed for each mesh point.

We could equally well have numbered the equations in other ways, e.g., let the \(j\) index be the fastest varying index: \(p = m(i,j) = i(N_y+1) + j\).